1. Introduction

Nowadays, several fields of Special Education use virtual teaching based on advanced technologies. Researchers, system developers, and educators are working to provide innovative and useful tools that offer immediate assistance to patients with special needs, as evidenced in (Bilotta, Gabriele, Servidio, & Tavernise, 2010; Chen, Chen, Wang, & Lemon, 2010; Porayska-Pomsta et al., 2012). In particular, these works focus on planning educational activities to support individuals with Autism Spectrum Disorders (ASD).

In this context, different studies confirm that individuals with ASD are able to use and interpret mobile applications and virtual environments satisfactorily (Parsons, Mitchell, & Leonard, 2004; Saiano et al., 2015; Stichter, Laffey, Galyen, & Herzog, 2013). Nevertheless, most ASD subjects have difficulty understanding the mental and emotional states of the people around them (Uljarevic & Hamilton, 2013). For this reason, several studies have analyzed emotion identification in individuals with ASD, including within social situations (Bertacchini et al., 2013; Harms, Martin, & Wallace, 2010; Kim et al., 2014). However, some of these studies tested at most 10 intensity levels per emotion (Kim et al., 2014).

Any discussion of emotion recognition must mention the psychologist Paul Ekman, because of his studies on the categorization of "Basic Emotions". Ekman describes both the characteristics that distinguish one emotion from another and the characteristics shared by all emotions (Paul Ekman, 1984, 1992, 1999a; Paul Ekman & Davidson, 1994). Ekman and Friesen were also pioneers in developing measurement systems for facial expression (P Ekman & Friesen, 1976). This procedure is now known as the Facial Action Coding System (FACS). It describes any facial movement in terms of minimal Action Units (AUs) based on anatomical analysis. The AUs are specific facial muscle movements, and the measurement is a descriptive analysis of their behavior (P Ekman, 1982; Stewart Bartlett, Hager, Ekman, & Sejnowski, 1999). In principle, FACS can differentiate all visually distinguishable facial movements.

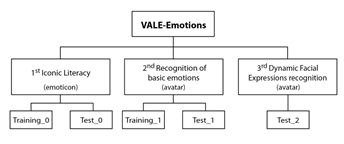

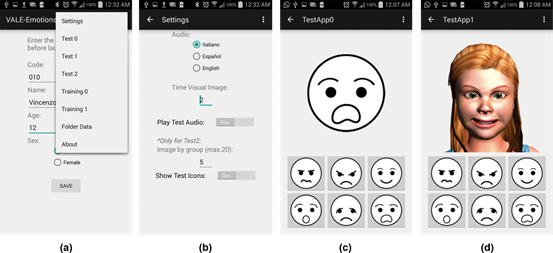

In light of this, this work reports an application (App) devoted to supporting teaching and learning activities on emotion recognition for individuals with ASD. This tool is part of an authoring Virtual Advanced Learning Environment (VALE). The App, named VALE-Emotions, has been developed for the Android mobile platform and consists of three educational levels (see Figure 1). It is aimed at stimulating and facilitating the understanding and recognition of six basic facial emotions: Joy, Sadness, Anger, Fear, Disgust, and Surprise (Paul Ekman, 1999a). These emotions have been digitally modeled on avatars, following an authoring experimental set of parameters based on two established codifications: 1) Motion Picture Experts Group - v4 (MPEG-4) and 2) Facial Action Coding System (FACS). In this study, each emotion is represented by a sequence of 100 pictures (intensity levels), forming a Dynamic Facial Expressions (DFE) collection.

To assess the effectiveness of the VALE-Emotions App, two hypotheses were proposed. The first assumes a high overall success rate in emotion recognition for High-Functioning (HF)-ASD subjects. The second assumes that it is possible to determine the intensity level within the DFE series at which effective recognition (≥50%) of each emotion occurs. To this end, training and test procedures with VALE-Emotions were carried out on mobile devices in real settings with HF-ASD participants, in order to collect the information needed to evaluate the App's functionality and the hypotheses. Furthermore, running on mobile devices makes the application portable and allows touch-based interaction. This is an advantage of the VALE-Emotions App, since previous work has shown that mobile technologies enhance manipulation, eye-hand coordination, perception, and intuition (Bertacchini et al., 2013; Kim et al., 2014; Porayska-Pomsta et al., 2012).

The rest of this work is organized as follows. Section 2 outlines the background of expression generation in VALE-Emotions, including an explanation of the authoring DFE codification, and describes the App interfaces and the experimental methodology. Section 3 discusses the results and the validation of the App and the hypotheses, and Section 4 presents the conclusions.

2. Methodology

2.1. Emotional Expression Codification

This section introduces an authoring parameterization for facial expressions applied to avatars. It is based on two established coding systems, which are reviewed below.

2.1.1. MPEG-4 Codification System

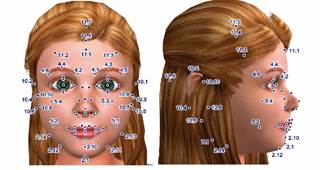

The human avatars conform to the Face and Body Animation (FBA) international standard, part of MPEG-4 (ISO/IEC 14496, 1999). MPEG-4 FBA specifies how human or human-like characters are animated through Face Animation Parameters (FAPs) and Body Animation Parameters (BAPs). The FAPs comprise two high-level parameters and 66 low-level parameters derived from the study of minimal facial actions, and these parameters are closely related to facial muscle actions (Giacomo, Joslin, Garchery, & Magnenat-Thalmann, 2003). Therefore, the FAPs, together with their ranges of control values, describe the movement of facial parts in animations of virtual human or human-like characters.

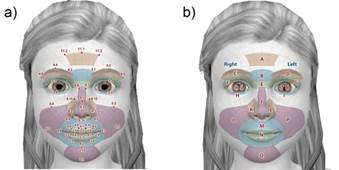

MPEG-4 also includes the Facial Definition Parameters (FDPs), which describe the Feature Points (FPs) of the human face. Figure 2 shows the FPs applied to the girl avatar face modeled for this study. According to MPEG-4, the FAPs control the actions of the key FPs, which are used to produce animated visemes and facial expressions, as well as head and eye movements (Moussa & Kasap, 2010).

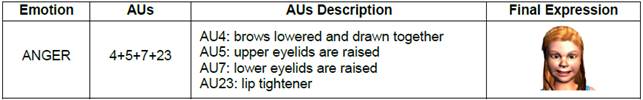

2.1.2. Facial Action Coding System (FACS)

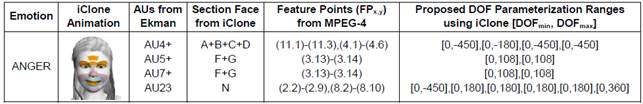

The other widely used coding system for representing natural human facial expressions derives from the well-known studies of Paul Ekman (Paul Ekman, 1992, 1999a, 1999b, 2003). These studies describe the encoding of 44 facial muscle movements from which any emotional expression can be generated. To detect such movements, Ekman developed the human-observer-based system known as FACS, which encodes each muscle movement individually as an Action Unit (AU). Table 1 describes the FACS AUs involved in the anger emotion.

2.1.3. Authoring Parameterization Model

This section proposes a parameterization model for emotional expression in virtual human faces. The model is based on the FACS and MPEG-4 frameworks, through the AUs and the FPs, respectively. For this purpose, the iClone software was used to animate the six basic emotions on a virtual girl avatar. Figure 3a shows the FPs linked to the face sections defined by iClone for the girl avatar, and Figure 3b shows how these face sections were associated with Ekman's AUs. Each face section was relabeled from "A" to "U" for reference in our parameterization. Table 2 shows an example of these relations, with the parameterization ranges for the anger emotion.

Using iClone, ranges of values called Degrees of Freedom (DOF) were defined for the FPs. Each DOF is associated with a specific FP and defines its translation or rotation value, as described by the AUs. The proposed DFE parameter value for each FP x.y was then obtained by Equation 1:

with $i = 1, 2, 3, \ldots, N$, where $DOF_{max}$ and $DOF_{min}$ are the extreme values of the DOF range (see Table 2), and $N$ is the number of images composing the DFE sequence for each emotion (e.g., in the 100-image anger sequence, FP11.1 takes the value -225 in the 50th image). Note that $DOF_{min}$ and $DOF_{max}$ may be nonzero and either positive or negative.
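Equation 1 is not reproduced in this text, so the following Python sketch rests on an assumption: that each FP value moves linearly from $DOF_{min}$ toward $DOF_{max}$ as the image index $i$ goes from 1 to $N$. This form is consistent with the FP11.1 example, where image 50 of 100 lies at the midpoint of its range, but it is not confirmed by the source. The range 0 to -450 used below is hypothetical, chosen only so that the midpoint reproduces the -225 quoted above; the actual ranges are those in Table 2.

```python
def dfe_value(i: int, n: int, dof_min: float, dof_max: float) -> float:
    """Value of one feature point (FP) in image i of an N-image DFE sequence.

    ASSUMPTION: Equation 1 is taken to be a linear interpolation from
    dof_min towards dof_max, so that image N reaches dof_max (maximum
    intensity of the expression).
    """
    if not 1 <= i <= n:
        raise ValueError("i must satisfy 1 <= i <= n")
    return dof_min + (dof_max - dof_min) * i / n


def dfe_sequence(n: int, dof_min: float, dof_max: float) -> list[float]:
    """All N values of one FP, one per image of the DFE sequence."""
    return [dfe_value(i, n, dof_min, dof_max) for i in range(1, n + 1)]


# Hypothetical DOF range for FP11.1 in the anger sequence (not taken from Table 2).
fp11_1 = dfe_sequence(100, dof_min=0.0, dof_max=-450.0)
print(fp11_1[49])  # image 50 -> -225.0
```

Because the function only takes the two extremes and the image count, the same code would serve FPs whose ranges are negative, positive, or straddle zero.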

2.2. VALE-Emotions App Description

Three interface layouts, with their corresponding activities, were programmed for the VALE-Emotions App using Android Studio, as described below.

2.2.1. Main Interface

The main interface of the VALE-Emotions App is shown in Figure 4a. Its layout consists of text and number fields for entering the patient data to be stored in the output results file (e.g., Code, Name, Age, and Sex). Because these data are important for research and diagnostic purposes, the activity behind this interface checks that the fields have been saved by the user before the training or test activities can be launched.
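The check described above can be illustrated with a short Python sketch. It is not the App's Android code: the class, the function names, and the `saved` flag are hypothetical, and only the four fields (Code, Name, Age, Sex) come from the text. The idea is simply that launching a training or test activity is gated on a complete, saved record.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PatientRecord:
    # Fields named after those listed for the main interface.
    code: str = ""
    name: str = ""
    age: Optional[int] = None
    sex: str = ""
    saved: bool = False  # hypothetical flag, set once the user saves the form


def missing_fields(record: PatientRecord) -> list[str]:
    """Names of the required fields that are still empty."""
    missing = [f for f in ("code", "name", "sex") if not getattr(record, f).strip()]
    if record.age is None:
        missing.append("age")
    return missing


def can_launch_activity(record: PatientRecord) -> bool:
    """Training/test activities may start only after all fields are filled and saved."""
    return record.saved and not missing_fields(record)
```

Returning the list of missing fields, rather than a bare boolean, would let an interface tell the user which entry still needs to be completed.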

2.2.2. Menu and Settings Interface

All activities can be accessed from the menu located in the top-right corner of the main interface (see Figure 4a). Figure 4b shows the "Settings" interface, where the language of the audio feedback for the training/test activities can be set to Italian, Spanish, or English. The display time of each emotion expression during the training/test activities can also be adjusted, as well as the number of DFE pictures to be presented.

2.2.3. Interfaces of the Training and Test Activities

As mentioned in section 1, the VALE-Emotions App comprises three learning levels. The first activity targets the patient's icon literacy, since a set of icons is used to interact with the next two activities. The standard icons are taken from the so-called "Sistemas Aumentativos y Alternativos de Comunicación" (SAAC; Augmentative and Alternative Communication Systems). As shown in Figure 4c, the "Training 0" and "Test 0" activities respectively train and assess the patient's icon literacy, supported by an optional audio feedback. Each of the six basic emotions is associated with a specific, unique icon.

Fig. 4: VALE-Emotions App interfaces: a) Main and Menu interface, b) Settings interface, c) Iconic Literacy interface and d) Emotion Recognition interface for DFE in training/test 1 and 2.

The "Training 1" and "Test 1" activities are aimed at practicing and evaluating the recognition of the basic emotions expressed by virtual avatars. The expressions involved in these activities represent the highest intensity levels of the six basic emotions generated through the DFE (see Figure 4d). Finally, the "Test 2" activity assesses the patient's recognition level on a set of twenty pictures per basic emotion, obtained through the DFE parameterization model presented in section 2.1.3. Pictures are displayed in random order to avoid any relation between the presentation order and the position of each picture in its sequence.

The data generated by the patient's interaction with the training/test activities are saved as CSV files in a folder named "VALE-Emotions Data V010" on the mobile device's storage.
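As an illustration of this storage step, the following Python sketch appends result rows to a per-patient CSV file inside a folder with the name given above. It is a hypothetical sketch, not the App's implementation: the CSV columns, the one-file-per-patient layout, and the function name are all assumptions, since the text does not describe the file schema.

```python
import csv
from pathlib import Path

DATA_DIR_NAME = "VALE-Emotions Data V010"  # folder name given in the text

# Hypothetical column layout; the actual CSV schema is not described.
FIELDS = ["code", "activity", "emotion", "intensity", "answer", "correct", "response_time_s"]


def append_results(storage_root: Path, patient_code: str, rows: list[dict]) -> Path:
    """Append result rows to <storage_root>/<DATA_DIR_NAME>/<patient_code>.csv."""
    folder = storage_root / DATA_DIR_NAME
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{patient_code}.csv"
    write_header = not path.exists()  # header only when the file is new
    with path.open("a", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDS)
        if write_header:
            writer.writeheader()
        writer.writerows(rows)
    return path
```

Appending rather than overwriting keeps the results of successive sessions of the same patient in one file, which is convenient for the later analysis.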

2.3. Experimentation of VALE-Emotions in Special Education

The study was carried out in an assistance center in southern Italy (Crotone). The collaboration aimed to support learning activities for people with High-Functioning Autism Spectrum Disorder (HF-ASD). Several working meetings were held with these groups, involving personal interaction with the participants, as shown in Figure 5.

2.3.1. Subjects

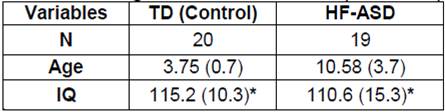

The first group of participants consisted of 19 subjects (15 males (78.9%) and 4 females (21.1%)) aged between 5 and 18 years (M=10.58, SD=3.68), diagnosed with HF-ASD by their assistance center. A second group of 20 Typically Developing (TD) subjects served as the control group (11 males (55%) and 9 females (45%)), aged between 3 and 5 years (M=3.75, SD=0.72). The selection requirements for both groups were: 1) consent of the parents or guardians, 2) no significant sensory or motor impairment, and 3) a full-scale IQ greater than 70. Unfortunately, the IQ data of the TD and HF-ASD groups were not available at the time of writing. To complete the comparison in Table 3, IQ values reported in the literature for groups with similar characteristics were used (Kim et al., 2014).

2.3.2. Procedure

Participants were assessed individually under similar conditions. All participants worked with the researchers using the VALE-Emotions App for three months starting in July 2015. All sessions were conducted by a researcher in a room familiar to the participants, with adequate lighting and free of distractions. A professional from each center (educator or psychologist) was also present during the experiments, to spare the participants the stress of being in front of people unfamiliar to them. Participants sat in a chair in front of a table on which the tablet running the App was placed. In this experiment, the participants were asked to recognize six basic emotions (Anger, Disgust, Joy, Fear, Sadness, and Surprise) each time one was displayed on the screen, and selected their answer by pressing icon buttons. The activities were performed in three levels: the first and second levels consisted of training and test activities, while the third comprised only a test activity. Each training/test stage lasted at most 15 minutes; the stages are described below.

A) Iconic Literacy Stage

The main goal is to determine whether the user knows the basic emotions represented in their simplest form, called "emoticons." During Training-0, the user presses each of the six emoticon buttons at the bottom of the screen (see Figure 4c). An enlarged image corresponding to the selected button is displayed in the center of the screen, while the app plays an auditory stimulus saying the emotion's name. To complete this activity, the evaluator explains each expression to the participant in detail. This training part is the only one in which the participant received gestural or physical guidance.

B) Recognition of Basic Emotions Stage

The purpose of this activity is to link the emotion picture displayed in the center of the screen with the corresponding icon button (see Figure 4d). During Training-1, the user touches each of the six buttons at the bottom of the screen. Each time, the avatar facial expression corresponding to the selected button is displayed while an auditory stimulus says the emotion's name. In this phase, too, the evaluator explains each displayed expression to the participant. Afterward, in the Test-1 activity, the app displays the six avatar facial emotions in random order. The auditory stimulus is deactivated to allow an intuitive recognition of the emotions. The user must identify the presented emotion by touching the corresponding button. If the user identifies the emotion correctly, a positive visual feedback symbol appears and another random emotion is displayed. Conversely, if the selected button is incorrect, a negative visual feedback symbol appears and the same emotion picture is displayed again until it is correctly identified. The interaction results are stored automatically. Only the maximum-intensity DFE image of each emotion is presented in this stage.
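The Test-1 feedback rule (a correct answer moves on to a new random emotion, a wrong one repeats the same picture) can be sketched as a small loop in Python. This is an illustrative sketch, not the App's code: the `answer` callback standing in for the user's touch, the log format, and the assumption that each of the six emotions is shown exactly once in shuffled order are all hypothetical.

```python
import random
from typing import Callable

EMOTIONS = ["Joy", "Sadness", "Anger", "Fear", "Disgust", "Surprise"]


def run_test1(answer: Callable[[str], str], rng: random.Random) -> list[tuple[str, str, bool]]:
    """Sketch of the Test-1 loop.

    ASSUMPTION: each emotion is shown once, in shuffled order. After a wrong
    answer the same picture is shown again until it is identified, so the
    loop only ends if the respondent eventually answers correctly. Every
    interaction is logged as (shown emotion, chosen button, correct?).
    """
    order = EMOTIONS[:]
    rng.shuffle(order)
    log = []
    for shown in order:
        while True:
            chosen = answer(shown)
            correct = chosen == shown
            log.append((shown, chosen, correct))  # positive/negative feedback shown here
            if correct:
                break
    return log
```

Logging every attempt, not just the final correct one, is what makes it possible to compute a success percentage over all interactions later on.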

C) Dynamic Facial Expressions Recognition Stage

This stage is the most important and representative one in this study. It aims to determine the intensity level at which the emotions generated by the DFE are effectively recognized. Each intensity corresponds to one of the parameterized images that represent the progression of facial actions forming a natural facial expression. Series of up to 20 intensity levels per emotion (up to 120 pictures) are displayed in random order in the center of the screen (see Figure 4d). The participant must recognize each emotion picture with a single attempt. Visual and audio stimuli are deactivated to assess the patients' intuitive emotion recognition. This test level must be applied only to patients who achieved more than 50% of correct answers in Test-1. VALE-Emotions ensures that no picture is presented more than once and allows the number of pictures shown to be adjusted.
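A Test-2 presentation order with these properties (a fixed number of intensity levels per emotion, all pictures shuffled, none repeated) could be generated as in the Python sketch below. The even spacing of the selected levels through the 100-image sequence is an assumption; with the defaults it yields levels 5, 10, ..., 100, which matches the levels listed in the Test-2 results. The function name is hypothetical.

```python
import random

EMOTIONS = ["Joy", "Sadness", "Anger", "Fear", "Disgust", "Surprise"]


def test2_schedule(n_levels: int = 20, n_images: int = 100, seed=None) -> list[tuple[str, int]]:
    """Randomized, non-repeating Test-2 presentation order as (emotion, level) pairs.

    ASSUMPTION: the n_levels pictures are evenly spaced through the n_images
    DFE sequence, always ending at the maximum intensity n_images.
    """
    if not 1 <= n_levels <= n_images:
        raise ValueError("n_levels must be between 1 and n_images")
    # Integer floor division keeps the levels strictly increasing and distinct.
    levels = [(n_images * k) // n_levels for k in range(1, n_levels + 1)]
    trials = [(emotion, level) for emotion in EMOTIONS for level in levels]
    random.Random(seed).shuffle(trials)  # each picture appears exactly once
    return trials
```

Shuffling a precomputed list, rather than drawing pictures at random on each trial, guarantees by construction that no picture is presented twice.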

3. Results and Discussion

This section presents a descriptive analysis of the data collected in the three stages described above during the VALE-Emotions experiments. The main dependent variable is the percentage of correct emotion recognition, computed from the number of right and wrong answers obtained in each Test. The success percentages for the six basic emotions are presented below for the TD and HF-ASD groups, and the results of the two groups are compared. Finally, based on the experimental data, a general calculation of the intensity level at which DFE-generated emotional expressions are effectively recognized is presented.

3.1. Iconic Literacy Results

Table 4 summarizes, for each group, the average percentage of correct recognition of the six basic emotions represented by emoticons, obtained in Test-0. In this first stage, the HF-ASD group showed high emotion recognition, above 80% for all emotions. This indicates that the iconic literacy stage was successful for the participants of this group.

3.2. Recognition of Basic Emotions Results

Table 5 summarizes, for each participant group, the average percentage of correct recognition of the six basic emotions during Test-1. These emotions were animated on the girl avatar using the maximum intensity levels of the DFE.

For the HF-ASD group, the highest recognition was for Joy (M=92.11, SD=19.54), closely followed by Anger (M=87.81, SD=28.12) and Surprise (M=85.09, SD=26.45). These results are strongly consistent with those reported in (Kim et al., 2014; Serret et al., 2014). This suggests that HF-ASD subjects often perform relatively similarly to their counterparts in emotion recognition (Harms et al., 2010), which contrasts with the common belief that children with HF-ASD show impaired emotion recognition (Kanner, 1943; Kim et al., 2014; Uljarevic & Hamilton, 2013).

Given the inconsistent findings reported by several researchers on emotion recognition in subjects with autism, Harms et al. (2010) conducted a detailed analysis seeking to explain these conflicting results. The key seems to lie in the compensatory mechanisms used by individuals with ASD (Grossman, Klin, Carter, & Volkmar, 2000; Vesperini et al., 2015), such as verbal mediation or feature-based learning. This explanation is supported by evidence that people with ASD decode facial expressions differently from Typically Developing (TD) individuals (Fink, de Rosnay, Wierda, Koot, & Begeer, 2014). In this way, older children with HF-ASD may often perform relatively similarly to their TD peers in emotion recognition. These arguments support the results obtained in our experiments.

3.2.1. Determination of Response Time

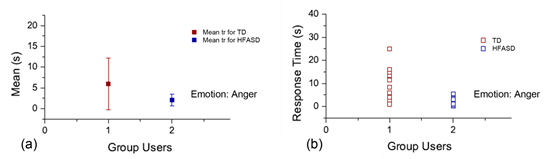

To further assess emotion recognition in Test-1 within each group, the time taken to recognize each expression was also considered. The response time was measured as the difference between the time at which the subject touched the button on the screen and the time at which the emotion was displayed. Figure 6a plots the Mean Response Time (MRT) and its Standard Deviation (SD) for the Anger emotion in both participant groups, and Figure 6b shows the scatter of the single response times of all participants. Table 6 summarizes this information for each emotion and participant group.
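The response-time computation described above can be sketched in Python as follows. The event tuple layout is hypothetical, only correct answers (hits) are kept, as in Table 6, and the use of the sample standard deviation is an assumption, since the text does not say which SD estimator was used.

```python
from statistics import mean, stdev


def response_times(events):
    """Response time of each hit: touch timestamp minus display timestamp (seconds).

    `events` is an iterable of (emotion, t_display, t_touch, correct) tuples;
    this record layout is hypothetical.
    """
    return [(emotion, t_touch - t_display)
            for emotion, t_display, t_touch, correct in events if correct]


def mrt_by_emotion(events):
    """Mean response time (MRT) and sample SD of the hits, per emotion.

    The SD of a single hit is undefined; it is reported as 0.0 here.
    """
    per_emotion = {}
    for emotion, rt in response_times(events):
        per_emotion.setdefault(emotion, []).append(rt)
    return {e: (mean(v), stdev(v) if len(v) > 1 else 0.0) for e, v in per_emotion.items()}
```

For example, two Anger hits answered 2.0 s and 4.0 s after display give an MRT of 3.0 s and a sample SD of about 1.41 s, while a wrong answer in between would simply be ignored.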

Table 6: Mean response time (MRT) and standard deviation (SD) of correct recognitions (hits) per participant group and emotion in Test-1.

| Emotion | TD MRT [s] | TD SD [s] | HF-ASD MRT [s] | HF-ASD SD [s] |
|---|---|---|---|---|
| Anger | 5.972 | 6.175 | 2.058 | 1.434 |
| Disgust | 6.097 | 5.130 | 3.150 | 2.609 |
| Fear | 8.661 | 7.248 | 3.402 | 3.077 |
| Joy | 5.094 | 7.124 | 2.220 | 1.219 |
| Sadness | 5.924 | 5.789 | 2.254 | 2.183 |
| Surprise | 4.958 | 4.291 | 2.028 | 1.488 |

Fig. 6: Single response times of correct recognitions (hits) per participant group for the Anger emotion in Test-1.

The data in Table 6 show that the HF-ASD participants recognized all six emotions faster than the TD participants during Test-1. The spread of the data was also smaller for the HF-ASD group, meaning that recognition times were more homogeneous among its participants.

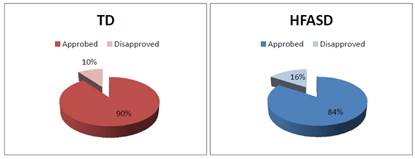

3.2.2. Screening of Rated Participants for Test-2

As mentioned in section 2.3.2-C, the subjects of each group were screened to determine who could take Test-2. The criterion was that only subjects whose average success rate in Test-1 was higher than 50% could continue with Test-2. The average success percentage was computed as the number of correct answers divided by the total number of interactions. Figure 7 presents the results of this screening for each participant group: 90% of the TD participants and 84.2% of the HF-ASD participants were able to continue with Test-2.
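The screening rule reduces to a simple computation, sketched in Python below with hypothetical function names. Note that the criterion is strict: a participant with exactly 50% correct answers (for instance 6 hits in 12 interactions) does not qualify. As a side check, assuming the reported percentages refer to all 20 TD and 19 HF-ASD participants, 90% and 84.2% correspond to 18 and 16 participants, respectively.

```python
def success_rate(correct: int, total: int) -> float:
    """Percentage of correct answers over all Test-1 interactions."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * correct / total


def eligible_for_test2(correct: int, total: int, threshold: float = 50.0) -> bool:
    """Screening rule: strictly more than `threshold` percent correct in Test-1."""
    return success_rate(correct, total) > threshold
```

Because wrong attempts count as interactions in Test-1, every repeated picture lowers the success rate even though the participant eventually answers correctly.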

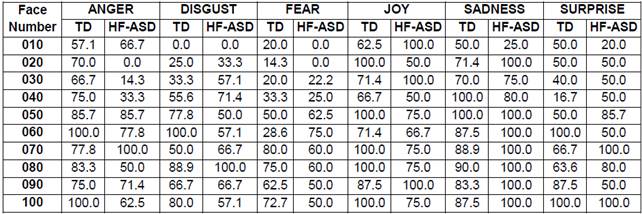

3.4. Dynamic Facial Expressions Recognition Results

The results obtained in the Test-2 are the strongest point of the present study. The main interest was to identify in which intensity level of the DFE sequence generation, for individual emotions, an acceptable recognition for each participant group was performed. Thus, an individual analysis of each emotion, considering the percentage of hits according to each intensity level (005, 010,···,100), per each participant group was done. Summary of these results are presented in Table 7 for avatar faces number: 010, 020, 030, 040, 050, 060, 070, 080, 090, and 100.

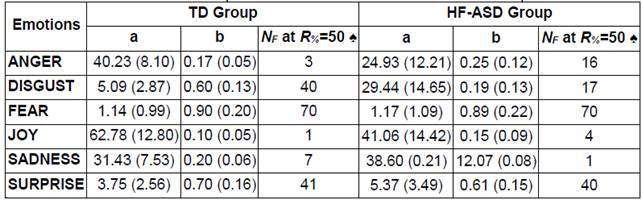

3.4.1. Determination of Intensity Levels

To determine objectively the intensity level at which acceptable recognition of each emotion occurs within a DFE sequence, a power curve was fitted to the experimental data and its parameters were analyzed. Figure 8 shows an example of this procedure for the Anger emotion. The fitting expression is defined as Equation 2:

$$R_{\%} = a \, N_F^{\,b} \qquad (2)$$

where:

$R_{\%}$ is the dependent variable, representing the group's percentage of emotion recognition,

$N_F$ is the independent variable, which can be set experimentally and represents the intensity level of the emotional expression, and

$a$ and $b$ are the parameters that best fit the function to the experimental data.

Fig. 8: Percentage of recognition at different intensity levels for the Anger emotion in Test-2, with the power curve fitted to the experimental data for a) TD and b) HF-ASD groups.

The power curve was chosen because it is naturally defined for positive values of the independent variable and, for a positive parameter a, yields only positive values of the dependent variable. These properties matter in this study because both the percentage of success recognition and the intensity level of the DFE sequence take only positive values between 0 and 100. Moreover, the parameter b in Equation 2 determines how fast the dependent variable (percentage of success recognition) grows as a function of the independent variable (intensity level of the emotional expression), scaled by a. The numerical results of the fitting are presented in Table 8.
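The text does not say how the power curve was fitted. One common approach, shown here as a Python sketch under that caveat, is ordinary least squares on the log-log form $\log R_{\%} = \log a + b \log N_F$; a nonlinear least-squares fit on the original scale would generally give somewhat different parameters on real data. Points with zero recognition must be dropped in this approach because $\log 0$ is undefined. The function name and the synthetic data in the test are illustrative only and are not the study's data.

```python
import math


def fit_power(levels, recognition):
    """Fit R = a * N_F**b by linear least squares on log R = log a + b * log N_F.

    Points with a non-positive level or zero recognition are dropped because
    their logarithm is undefined.
    """
    pts = [(math.log(x), math.log(y)) for x, y in zip(levels, recognition) if x > 0 and y > 0]
    if len(pts) < 2:
        raise ValueError("need at least two positive data points")
    n = len(pts)
    mx = sum(u for u, _ in pts) / n
    my = sum(v for _, v in pts) / n
    sxx = sum((u - mx) ** 2 for u, _ in pts)
    if sxx == 0:
        raise ValueError("need at least two distinct intensity levels")
    sxy = sum((u - mx) * (v - my) for u, v in pts)
    b = sxy / sxx                 # slope in log-log space = exponent b
    a = math.exp(my - b * mx)     # intercept in log-log space = log a
    return a, b
```

On data that follow an exact power law, such as $20 \, N_F^{0.25}$ sampled at levels 5, 10, ..., 100, this recovers a = 20 and b = 0.25 up to floating-point error.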

Table 8: Results of the power fit applied to the percentage of success recognition in the DFE evaluation of the TD and HF-ASD groups.

Furthermore, by solving Equation 2 for $N_F$, it is possible to approximate, for each emotion and participant group, the intensity level within the DFE sequence at which the percentage of success recognition meets a predetermined criterion of 50% of hits. The resulting expression is Equation 3:

$$N_F = \left( \frac{50}{a} \right)^{1/b} \qquad (3)$$

Table 8 also reports the values of Equation 3 for the TD and HF-ASD groups. These values give, for each emotion, the intensity level at which acceptable recognition begins. Both groups reached acceptable recognition of Joy and Sadness within the first intensity levels.
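Evaluating the threshold level from the fitted parameters is a one-line computation, sketched in Python below under the assumption that Equation 3 is the inverse of the power form of Equation 2 at $R_{\%} = 50$. How the authors converted the continuous result into a face number is not stated; the helper `first_acceptable_face` rounds up and clamps to the range 1..100, which is an assumption. Mathematically, whenever $a \geq 50$ and $b > 0$, the threshold is at most 1, so the criterion is met from the first image.

```python
import math


def level_for_recognition(a: float, b: float, target: float = 50.0) -> float:
    """Intensity level N_F at which a * N_F**b reaches `target` percent."""
    if a <= 0 or b == 0:
        raise ValueError("need a > 0 and b != 0")
    return (target / a) ** (1.0 / b)


def first_acceptable_face(a: float, b: float, target: float = 50.0, n_images: int = 100) -> int:
    """Smallest face index meeting the criterion.

    ASSUMPTION: the continuous level is rounded up and clamped to 1..n_images.
    """
    return min(max(math.ceil(level_for_recognition(a, b, target)), 1), n_images)
```

For instance, with illustrative parameters a = 20 and b = 0.25, the threshold is $(50/20)^{4} = 39.0625$, i.e. face 40.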

At first glance, the results in Table 8 indicate that the TD group recognized Joy from the first intensity level (i.e., face 1), followed by Anger from level 3 (i.e., face 3) and Sadness from level 7 (i.e., face 7). Acceptable recognition of Disgust and Surprise began at intensity level 40 (i.e., face 40). Finally, Fear was recognized only from intensity level 70 (i.e., face 70).

Similarly, the HF-ASD group recognized Sadness acceptably from the first intensity level (i.e., face 1), followed by Joy from level 4 (i.e., face 4). Anger and Disgust both began to be recognized from intensity level 16 (i.e., face 16), and Surprise from level 40 (i.e., face 40). Finally, Fear was recognized only from intensity level 70 (i.e., face 70). Note that the TD and HF-ASD groups obtained the same intensity levels for Fear (70) and Surprise (40).

4. Conclusions

Encouraging results have been obtained with the VALE-Emotions App. This application was developed to stimulate and facilitate both the teaching and the evaluation of the recognition of six basic emotions at different intensity levels. The effectiveness of the App relies on the emotion image sequences generated by the Dynamic Facial Expressions (DFE) parameters reported in this work. The evaluation of the participants' emotion recognition made it possible to assess the two hypotheses proposed in this work. The first assumed a high emotion recognition success rate for the HF-ASD participants, while the second assumed that it is possible to determine the intensity level at which a 50% success rate in recognizing emotions within the DFE sequences is reached. The results support the first hypothesis, given the high success rate of the HF-ASD participants in Test-1; overall, the average emotion recognition success of the HF-ASD group was as high as that of the TD group. The second hypothesis was confirmed by determining, for each emotion, the intensity level at which acceptable recognition began. Finally, the analysis of the response times of each participant in each group shows that the HF-ASD participants recognized all six basic emotions, presented at the maximum intensity level, faster than the TD participants.