Introduction

Advances in computational design technologies have long been influential (Asanowicz, 1989; Pittioni, 1992), and their effect on curricula has intensified over the last two decades (Oxman, 2008; Duarte et al., 2012; Varinlioglu et al., 2016; Benner & McArthur, 2019; Fricker et al., 2020). The diversification and proliferation of computational design tools, methods and processes have made it necessary to reconsider the issue of creativity in architecture education. Creativity is a core concept in design studios, which are a vital part of architecture education (Casakin & Kreitler, 2008; Kalantari et al., 2020). It has also been an important issue in the context of computational design (Brown, 2015; Brown, 2019). However, few studies promote creativity and focus on creativity assessment in relation to computational design in architecture education.

The lack of a universally accepted definition of creativity and the difficulty of combining creativity and computational design have led to a subjective understanding of creativity (Doheim & Yusof, 2020; Kalantari et al., 2020). The comprehension of creativity differs among individuals depending on factors such as level of expertise and personal motivation or tendencies. Accordingly, this study aims to gain a better understanding of the potential gaps between students and experts in the assessment of creativity. By uncovering the expectations around creativity, the study is expected to help both students and instructors strengthen their common ground for communication. Rather than examining creativity through students’ processes and course outcomes, this study concentrates on the way students and experts assess creativity. To this end, a teaching experiment conducted in a master's-level course, Digital Architectural Design and Modelling (DADM), was examined in detail.

The three research questions are as follows:

Through which concepts do students assess creativity?

What concepts do experts use to evaluate creativity?

Does any gap emerge between student and expert perspectives regarding the assessment of creativity?

The study is organized as follows. Section 2 presents an overview of research on assessment of creativity in the context of architecture education and computational design education in architecture. Section 3 starts with the introduction of the course, and continues with respondents, data collection and employed methods. Section 4 presents a comparative analysis of student peer review and expert review processes. Section 5 provides results in the form of responses to research questions, a comparison of the findings and outcomes of this study with relevant literature, and the contribution of the research to improving the syllabus of DADM.

Background

Creativity has been a key concept in education, and it has gained significance as one of the crucial skills for the 21st century (Henriksen et al., 2016; Lucas & Spencer, 2017). In architecture education, creativity is described as a slippery, nebulous, and ill-structured concept (Spendlove, 2008). Regarding its assessment, some researchers support the idea that creativity is an innate skill (Feldhusen & Goh, 1995), whereas others treat it as an educable quality (Sternberg & Williams, 1996).

Technology, as a field of new possibilities for invention (Barkow & Leibinger, 2012), is a key concept in the current architecture agenda. Digitalization in tools, methods and milieus takes creativity to another level. Zagalo and Branco (2015) define creativity, in contrast to traditional skill-based approaches, as something achievable by anyone who finds the right domain in which to express and externalize his/her inner ideas. Benjamin (2012) underlines the duality between efficiency and creativity, while Van Berkel (2012) argues that the concerns of utility and quality are intertwined in the contemporary digital context. Lee et al. (2015) approach creativity through the criteria of novelty, usefulness, complexity, and aesthetics, and use the expert evaluation method. Regarding the assessment of creativity, they point out the differences among experts and underline the need either for more explicit criteria or for a larger number of participants within the expert evaluation method.

Regarding creativity in architectural education and computational design, the majority of the research is about what creativity is. From a different point of view, Csikszentmihalyi (1997) raises the question of “where is creativity?” rather than “what is creativity?”, and emphasizes that creativity can only be achieved with the synergy of multiple layers, which are defined in the creativity literature in architecture and design as design procedures (Gero, 2000), developmental processes (Götz, 1981), systematic steps (Garvin, 1964), and the creative bridge (Cross, 1997).

To be able to understand the creativity assessment process better, previous researchers have defined their own concepts. In 1937, Catherine Patrick offered the systematic stages of (i) preparation, (ii) incubation, (iii) insight, and (iv) verification of concretization. To improve the stages proposed by Patrick (1937), Götz (1981) added the stages of (v) product and (vi) complex process of evaluation (morality, usefulness, scientific accuracy, originality, beauty). As Fleith (2000) describes, Feldhusen and Goh (1995) conceptualize creativity through four categories: (i) person, (ii) product, (iii) process, and (iv) environment. Relatedly, Tardif and Sternberg (1988) focus on the three aspects of a creative person: (i) cognitive characteristics, (ii) personality and emotional qualities, and (iii) experiences during one's development. In another study, Botella et al. (2011) define nine stages of a creative process as preparation, concentration, incubation, ideation, insight, verification, planning, production and validation. Accordingly, Folch et al. (2019) offer a threefold process of creativity: (i) preparation, (ii) ideation, and (iii) verification & evaluation.

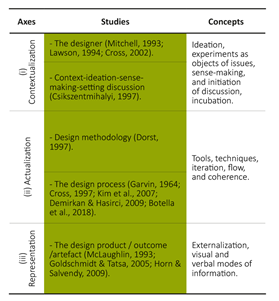

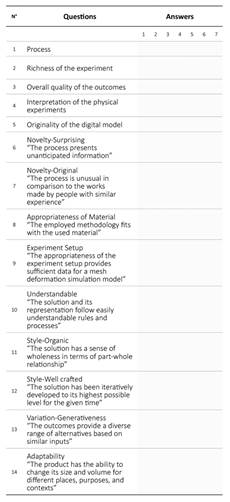

Considering the concepts proposed in previous research and Csikszentmihalyi’s (1997) above-mentioned question, this study proposes three interrelated lenses of a creative process: (i) contextualization, (ii) actualization, and (iii) representation (CAR). The contextualization lens involves ideation, experiments as objects of issues, sense making, and initiation of discussion. The actualization lens refers to the research process, which consists of tools, techniques, iteration, flow, and coherence. The representation lens corresponds to the externalization of design ideas, including but not limited to visual and verbal modes of information (Table 1). To make each lens more explicit, this study defines a series of criteria as follows: process, richness of the experiment, overall quality of the outcomes, interpretation of the physical experiments, originality of the digital model, novelty-surprising, novelty-original, appropriateness of material, experiment setup, understandable, style-organic, style-well crafted, and variation-generativeness and adaptability.

Today’s wide-ranging use of computational tools and approaches in architectural design processes makes it more difficult to investigate the source of creativity and to assess it. Thus, this study assumes that creativity can be achieved through the coalescence of the three lenses, which together constitute a whole creative process.

Methods

This study employs quantitative and qualitative research methods sequentially. As the students’ submissions for DADM were used as assessment material, the following subsection first introduces the course (DADM) with its teaching methodology, goals, duration, type of communication, assignments, and outcomes. Then, the respondents, data collection procedures and employed methods are presented in detail.

The Course: DADM

As one of the five compulsory courses of the Architectural Design Computing graduate program at Istanbul Technical University, DADM focuses on supporting architecture students' abilities to gain and use theoretical and practical knowledge of computational design in architecture, building on undergraduate-level competencies.

The teaching method of DADM consists of lectures given by instructors and invited guests, readings and generation of reflection diagrams, hands-on exercises, physical experiments, in-class workshops, student presentations, group discussions, and jury evaluations. The goals of the DADM are as follows:

To have a basic understanding of current parametric and algorithmic design approaches, as well as insights on the future directions of digital design and modelling.

To gain a critical awareness of computational design methods in architecture beyond merely using computers as representation tools.

To gain experience in developing novel strategies for emergent situations that may arise in the digital design and modeling process.

To gain a critical perspective on assessing processes and products of the digital milieu.

When taught in person, DADM runs for 14 weeks at 3 hours per week, plus a final presentation session. Under the European Credit Transfer and Accumulation System (ECTS), DADM carries 7.5 ECTS credits, which corresponds to approximately 187.5 hours of study in a semester. The course material, the reading list, and the hands-on exercises are updated each semester depending on the selected theme.
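The workload figure above can be checked with simple arithmetic. The sketch below assumes a conversion of 25 study hours per ECTS credit, which is the rate implied by the 7.5-credit and 187.5-hour figures; the constant and function names are illustrative and are not taken from the course documentation.

```python
# Assumption: 1 ECTS credit corresponds to 25 hours of study,
# the rate implied by "7.5 ECTS is approximately 187.5 hours" in the text.
HOURS_PER_ECTS = 25.0


def workload_hours(ects_credits: float) -> float:
    """Total expected study hours for a course with the given ECTS credits."""
    return ects_credits * HOURS_PER_ECTS


def contact_hours(weeks: int, hours_per_week: float) -> float:
    """Scheduled in-class hours, excluding the final presentation session."""
    return weeks * hours_per_week


total = workload_hours(7.5)       # total semester workload in hours
in_class = contact_hours(14, 3)   # weekly sessions only
independent = total - in_class    # hours left for work outside the sessions
```

Under this assumption, the weekly sessions account for 42 of the 187.5 hours, which leaves about 145.5 hours for readings, exercises, experiments, and the final presentation.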

In the 2020-2021 fall semester, DADM was conducted remotely through the Zoom platform. For the group discussions and some of the in-class exercises, the breakout room option of Zoom was used. The course materials were shared both on Google Drive and through the online submission system provided by the university. Moreover, students had the opportunity to revisit the video recordings of the course. Peer review and expert review modules were added to DADM in the 2020-2021 fall semester to foster students’ assessment skills.

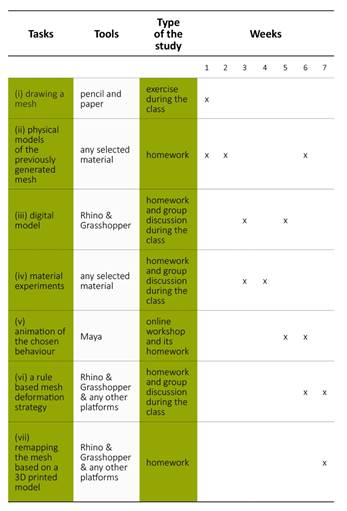

The theme of the 2020-2021 fall semester, an assignment named deMesh-deMap, was introduced to students at the beginning of the semester as a rule-based mesh deformation strategy. Apart from the semester-long assignment, a series of weekly tasks were given. The tasks, tools, type of study, and their durations are detailed in Table 2.

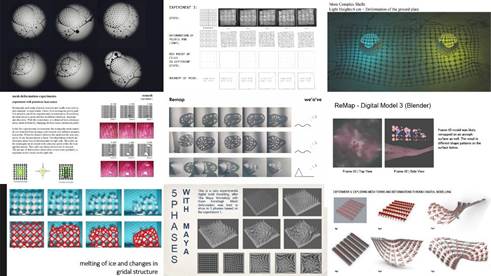

Students were expected to develop experiment setups to observe tectonic characteristics in the change of physical and chemical processes, extract rules, relations and variables from the observed experiments, and explore the dynamic modes of mesh geometry. The students were asked to demonstrate the experience they gained throughout the semester in a booklet. The booklets were submitted in the 2020-2021 fall semester within the scope of the DADM course, including all tasks and assignments supporting the midterm submission. The midterm assignments in an individual booklet format (PDF) were used as a source of evaluation material (Figure 1).

Respondents, Data Collection and Employed Methods

The data collection process was conducted online with two independent groups of respondents: students and experts. The first group consisted of 21 students from Istanbul Technical University who participated in DADM in the 2020-2021 fall semester. Among the 21 students, 11 were female and 10 were male. Their ages ranged from 22 to 28 years, with a mean of 24. The distribution of programs, degrees of education and number of respondents is shown below:

● Material Science and Engineering Students - Master Level - 2

● Architecture Design Computing Students - PhD Level - 4

● Architecture Design Student - PhD Level - 1

● Architecture Student - Undergraduate Level - 4

● Architecture Design Computing Students - Master Level - 9

● Game and Interaction Technologies Student - Master Level - 1

The diversity of the participants in terms of digital modelling skills, level of education and the programs in which they were enrolled is considered to have a positive impact on the reliability of the collected data.

The second group of respondents consisted of 18 experts, faculty members from architecture departments of different universities: Associate Professors, Assistant Professors, PhD degree holders, PhD candidates, and Practicing Architects holding Master's Degrees. Their ages ranged from 30 to 40 years, with a mean of 36. Both groups of respondents were informed beforehand that their personal information would be kept anonymous, and it was ensured that the participants did not see each other’s responses.

The collected data consist of verbal explanations (qualitative data) and responses to an online questionnaire (quantitative data). First, respondents were asked to write, through an online form, a critical evaluation of 100-200 words in the context of creativity for 2 projects out of 11. The final submission, including the diagrams and the detailed documentation of the projects regarding the whole process, was shared online. The data collected in this phase were used in the qualitative analysis. A systematic analysis of the text data required a conceptual approach; following a comprehensive literature review on creativity, the three-fold lenses of contextualization, actualization and representation (CAR) were proposed. The text data were parsed into segments (words, phrases or sentences, treated as concepts) through a semantic evaluation by the authors, and these concepts were matched with the related CAR lens. To investigate students’ and experts’ perspectives on creativity assessment through CAR lenses, a chi-square test of independence was applied. Each CAR lens identified in the qualitative analysis may correspond to one or more criteria of the questionnaire.

In addition to the qualitative phase, an online questionnaire form based on a 7-point Likert scale was also employed for the data collection phase of the quantitative research. The online questionnaire form focuses on the evaluation of the overall study (1 to 5), process (6 to 9), and product (10 to 14) respectively. The questionnaire form is given in Table 3.

The scale items of the 7-point Likert scale are: none (1), not at all (2), maybe (3), ok (4), good (5), great (6), and awesome (7). The questionnaire results were evaluated using the Statistical Package for Social Sciences (SPSS) software. A normality test was conducted first, followed by a two-sample t-test. Relating the qualitative and quantitative research facilitates a more systematic evaluation of creativity assessment.

The main reason for the order of the above-mentioned steps is to avoid influencing participants' vocabulary or presenting any predefined structure for the process. The students were given up to 60 minutes during the course hours to answer the open-ended question. Zoom breakout rooms were set up for the evaluation process. The experts, in contrast, participated in the review process asynchronously and without a time restriction. The responses from all participants were collected via separate text files in Google Docs.

Results

Results of Quantitative Research

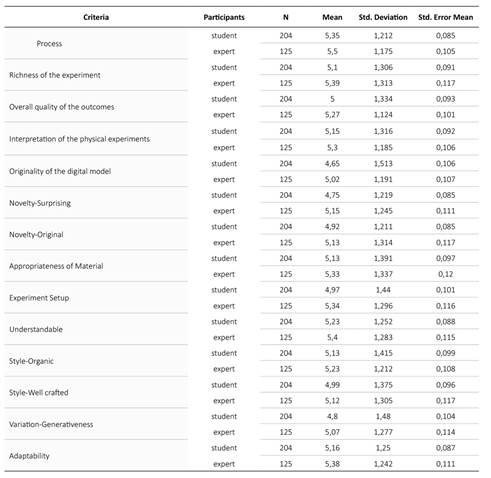

The questionnaire results were evaluated for each question separately. Before the type of statistical test was chosen, a normality test was conducted. The normality test was based on the Kolmogorov-Smirnov test, and the skewness and kurtosis values were interpreted following Tabachnick and Fidell (2014). The results indicated a normal distribution. Therefore, as seen in Table 4 and Table 5, a two-sample t-test was used to compare the responses of the students and the experts as two independent groups. The null hypothesis for each criterion was that there is no significant difference between the two groups; it was rejected where the test showed a significant difference. Only the cases showing a significant difference are discussed here.

According to the collected data, the difference between the responses of the students and the experts is not significant for the following criteria: process, overall quality of the outcomes, interpretation of the physical experiments, novelty-original, appropriateness of material, understandable, style-organic, style-well crafted, and variation-generativeness and adaptability. However, the scores of the experts are significantly higher than those of the students for four criteria: richness of the experiment (experts: mean = 5.39, sd = 1.313; students: mean = 5.1, sd = 1.306); originality of the digital model (experts: mean = 5.02, sd = 1.191; students: mean = 4.65, sd = 1.513); novelty-surprising (experts: mean = 5.15, sd = 1.245; students: mean = 4.75, sd = 1.219); and experiment setup (experts: mean = 5.34, sd = 1.296; students: mean = 4.97, sd = 1.44). According to the two-sample t-test, these results indicate a meaningful difference between the two groups of respondents for these four criteria.
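As a rough illustration of the comparison described above, the following sketch computes a two-sample t statistic for two independent groups of Likert ratings. It assumes the pooled-variance (equal variances) form of the test; the text does not state which variant was run in SPSS, and the ratings below are invented toy values, not the study's data. The function name `pooled_t` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(sample_a, sample_b):
    """Return (t statistic, degrees of freedom) for two independent samples.

    Assumption: equal population variances (pooled-variance t-test).
    """
    n_a, n_b = len(sample_a), len(sample_b)
    dof = n_a + n_b - 2
    # Weighted average of the two sample variances
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / dof
    # Standard error of the difference between the two means
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / std_err, dof


# Toy 7-point Likert ratings for one criterion (hypothetical, for illustration only)
expert_ratings = [6, 5, 6, 7, 5, 6]
student_ratings = [4, 5, 4, 5, 3, 4]

t_stat, dof = pooled_t(expert_ratings, student_ratings)
```

A positive statistic means the first group scored higher on average. Turning the statistic into a p-value requires the t distribution with `dof` degrees of freedom, which a statistics package such as SPSS provides.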

Results of Qualitative Research

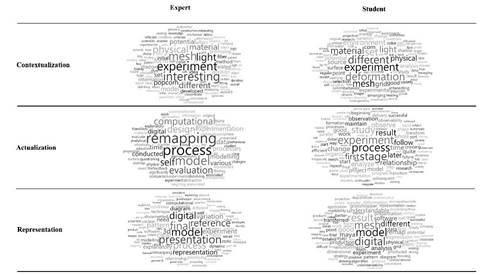

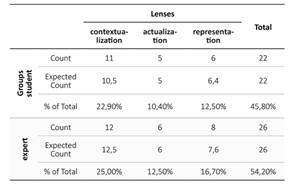

To extend the investigation, the verbal evaluations (100-200 words) of the two groups of respondents were compared using a chi-square test to see whether the CAR lenses are related to being a student or an expert. Based on the result of the chi-square test, the gap and the relations between the two groups of respondents are discussed in the context of CAR lenses.

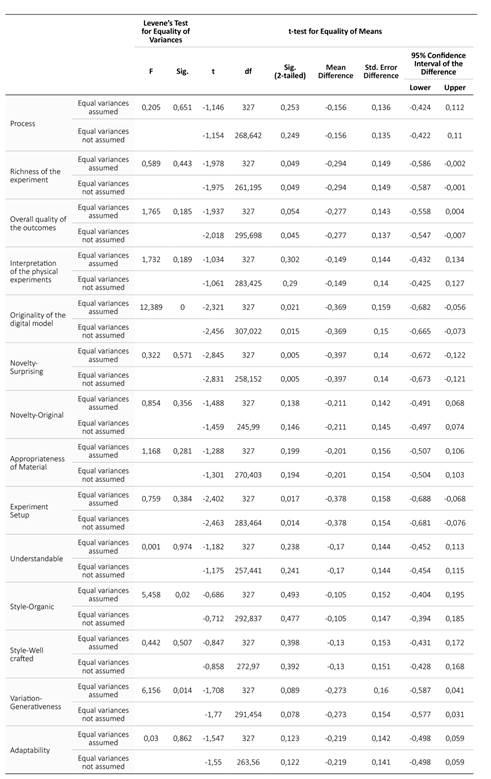

The qualitative data comprised 4531 words (sum of all verbal evaluations), and were analyzed in two separate clusters as students and experts. In the next step, following a semantic evaluation by the authors, the expressions (concepts) extracted from the text were matched with the related CAR lens (Figure 2).

For example, expressions including “experiment” were related to contextualization and representation depending on their meaning within the sentences. Likewise, actualization was understood as carrying out and materializing ideas and strategies, and as corresponding to “process”. The verbal expressions were also evaluated depending on how each participant discussed and criticized the content holistically. Based on the results of the semantic evaluation, the most associated lens for each evaluation was counted as one input (a value of 1 in the “count” rows) of Table 6. Using these counts, the chi-square test was run in SPSS.
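The counting step described above can be sketched as follows, assuming that each verbal evaluation has already been assigned exactly one most associated lens by the authors' semantic evaluation. The labels in `sample_labels` are hypothetical placeholders rather than the study's data, and the function name is illustrative.

```python
from collections import Counter

GROUPS = ("student", "expert")
LENSES = ("contextualization", "actualization", "representation")


def build_count_table(labelled_evaluations):
    """Tally (group, lens) pairs into a 2 x 3 table of counts.

    Rows follow GROUPS and columns follow LENSES; pairs that never
    occur are counted as 0.
    """
    counts = Counter(labelled_evaluations)
    return [[counts[(group, lens)] for lens in LENSES] for group in GROUPS]


# Hypothetical labels: one (group, most associated lens) pair per evaluation
sample_labels = [
    ("student", "contextualization"),
    ("student", "representation"),
    ("expert", "contextualization"),
    ("expert", "actualization"),
    ("student", "contextualization"),
]

count_table = build_count_table(sample_labels)
```

Each evaluation contributes exactly 1 to a single cell, so the table total equals the number of evaluations.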

The chi-square test indicated no statistically significant association between respondent group and CAR lens (χ²(2) = 0.087, p > .05); that is, the distribution of the most associated lens did not differ significantly between students and experts. In quantitative terms, the creativity assessments of the two groups through CAR lenses were therefore close to each other. However, the expanded qualitative analysis showed that the two groups evaluated the student works through CAR lenses in different ways. This observation corroborates the gap defined as a problem in the Introduction.
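For readers who want to see how such a statistic is obtained, the sketch below computes the Pearson chi-square statistic for a table with groups as rows and CAR lenses as columns. The counts in `hypothetical_counts` are invented for illustration and are not the values of Table 6. The p-value helper relies on the fact that, for exactly 2 degrees of freedom, the upper-tail probability of the chi-square distribution is exp(-x/2).

```python
from math import exp


def chi_square_statistic(table):
    """Return (chi-square statistic, degrees of freedom) for a count table.

    Assumption: every row and every column contains at least one
    observation, so no expected count is zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if group and lens were unrelated
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return chi2, dof


def p_value_two_dof(chi2):
    """Upper-tail p-value, valid only for 2 degrees of freedom."""
    return exp(-chi2 / 2.0)


# Hypothetical counts: rows = students, experts; columns = C, A, R lenses
hypothetical_counts = [[10, 6, 5], [9, 5, 4]]
chi2_value, dof = chi_square_statistic(hypothetical_counts)

# p-value implied by the reported statistic, chi-square(2) = 0.087
p_reported = p_value_two_dof(0.087)
```

A 2 x 3 table gives (2 - 1)(3 - 1) = 2 degrees of freedom, matching the reported χ²(2). For the reported statistic of 0.087, the closed form yields a p-value of about 0.96, which is consistent with p > .05.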

Findings

Creativity assessments by the two independent groups were compared using both quantitative and qualitative research methods. Beyond the quantitative results, the qualitative research provided a deeper understanding of the nature of the differences.

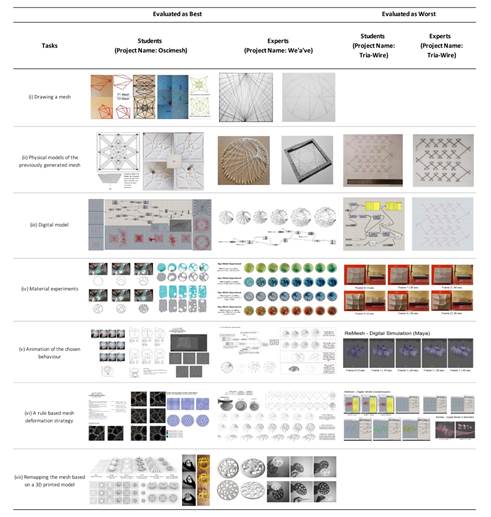

Based on the questionnaire results, the student evaluations pointed to one best and one weak project. The expert assessments, however, resulted in different best and weak projects for each evaluation criterion (Figure 3). In other words, while a block-like pattern was seen in the student assessments, diverging patterns were seen in the expert evaluations. Moreover, the students described the assignment as a linear, one-way process (e.g. analyze, results, final, stage, first, and later). The experts, on the other hand, followed a non-linear and holistic perspective in their assessments (e.g. self-evaluation, questioning the feedback, overlap, and superposition).

Source: Authors (2022)

Figure 3: Students’ works and evaluation results of the two groups of respondents

According to the qualitative analysis, students widely used terms such as “digital and physical models”, “experiment”, “product”, “output”, and “representation” to define the concept of “richness”. Experts, on the other hand, related “richness” to the context of the experiment setup and to in-depth investigations. Regarding the concept of “originality”, students tended to evaluate originality in comparison with other in-class projects, while experts evaluated each project against a wide range of literature. Moreover, student evaluations involved specific comments on the steps of the given tasks, while expert evaluations mostly focused on the overall methodology, process, and approach. Considering the concept of “novelty”, the term “interesting” was a common denominator between the two groups of respondents. Students used terms such as “promising”, “inspiring” and “new” in relation to physical models and outputs of the experiments, whereas experts mostly used “potential” and “exciting” to evaluate novelty in relation to the general context. Students evaluated the “experiment setup” mostly through the contextualization and representation lenses. Unlike students, experts looked at the “experiment setup” through the lens of actualization and considered it a part of the process (e.g. superpositioned, transition, and during the experimentation).

When the qualitative analysis was considered in terms of CAR lenses, contextualization was the primary lens in both groups’ assessments. However, the students mainly approached contextualization through experiment materials, components, software, and variety of tools, while the experts elaborated it through the concepts of context, strategy, and point of origin, that is, through how the materials, software, and tools were designed. The students associated the actualization lens with the richness category, while the experts considered actualization across the criteria of richness of the experiment, originality of the digital model, novelty-surprising, and experiment setup. Similarly, the students considered the representation lens in relation to output (e.g. model, report, product and result), while the experts concentrated on documentation of the whole process, connections, patterns, and flow.

Discussion

Like the meaning of creativity itself, both the teaching and the assessment of creativity are ambiguous. What was defined as novel and creative in the past has become common and standard with the dominance of digital tools in design processes. The unique design solutions of the past, which were generally accepted as creative, are becoming products of recent digital tools, methods, and environments. Relatedly, when a new computational design method or technique is introduced to architecture students, the final products may develop a certain homogeneity due to the dominance of the employed tools. One of the points that makes it difficult to discuss creativity in the age of computational design is the cyclical nature of design processes and the procedural structure of computational methods. As a result, it becomes more difficult for students, instructors, and experts to evaluate the creativity of digitally generated design solutions. To gain a better view of the differences between students and experts in the way they assess creativity, a hybrid methodology combining qualitative and quantitative research techniques was employed.

This study answers three research questions. The first and second research questions seek to identify the concepts used by the two respondent groups to assess creativity. The diagram in Figure 2 provides a ground for interpretation to answer these questions. The sizes of the mapped concepts vary according to their frequency of use in the written material. Certain concepts, such as experiment, digital, process, model, material, and mesh, can be easily derived from the responses of both groups. However, several concepts are more dominant for the experts, such as interesting, remapping, presentation, reference, computational, and deformation, whereas stage, result, and deformation are more dominant for the students.

For the third research question that seeks significant differences and gaps between the two groups regarding creativity assessment, the following conclusions were obtained:

While experts assess creativity with a panoramic overview that covers context, meaning, depth of ideation, process, and outcomes, the students mainly focus on specific details.

In cases where both groups of respondents consider similar criteria, the ways they conceptualize and evaluate creativity differ.

The experts approached the design process as a non-linear, holistic concept, while students regarded the process as sequences of steps.

To deepen the discussion, the obtained results are compared with related recent studies. Onsman (2016) discusses creativity as an alternative to expertise, focusing on both process and product, specifically in the context of the design studio. That study investigates ways of determining assessment criteria based on the scoring rubric method, specifically through learning outcomes. Folch et al. (2019) research the creative process of architecture students and professionals using grounded theory. They highlight the impact of talking and recommend a dialogical creative process to turn creativity from a tacit into a more explicit process. In a recent study, Doheim and Yusof (2020) investigate the differences in the perception of creativity, in search of a level of agreement between two sample groups: students and instructors. Semi-structured interviews and a pre-designed questionnaire are used in the context of a specific architectural design studio, with the aim of promoting creativity within the design studio. The results show that there are more disagreements than agreements between students and instructors, and that there is more consensus among the instructors’ responses than among those of the students. Another result underlines the relation between the perception of creativity in a specific studio and the objectives of that studio.

While the mentioned studies have similarities with our research at certain points, such as the aim of promoting creativity in architecture education, questioning the process of creativity assessment, and conducting comparative research among different respondent groups, there are also distinctive points that reveal the original aspect of our study:

First, the majority of the relevant studies are conducted in the context of design studios. Our study, with an assumption of considering assessment as a part of the learning process, brings the discussion on creativity assessment to the field of computational design education in architecture where creativity has become crucial.

Second, this study focuses on the gap between students and experts, and approaches the gap constructively in search of ways of nourishing the design process, rather than developing an assessment method or proposing a creativity definition.

Third, this study combines qualitative and quantitative research methods to reveal the criteria that create the mentioned gap, and to investigate the nature and the meaning of the gap itself.

The findings of the study have also contributed to improving the syllabus of the course studied. Peer review sessions have supported students in gaining critical thinking skills. It is concluded that the practice of creativity assessment via peer review is a constructive factor in developing the creativity skills of students. Peer review has been added as a new support tool to a pedagogical approach that encourages the learner to take risks, self-criticize, and experiment. For instance, new criteria observed in the students’ detailed responses can nurture new design directions and evaluation criteria for DADM in the following semesters.

One semester-long teaching experiment provided a basis for this study. The research might be extended with the participation of more students and experts in future studies. To obtain comprehensive inferences, more criteria such as educational background, academic social networks, and research fields of experts might be considered.