Suggested citation:

Luna, M.; Moya, J.; Aguilar, W.; Abad, V. (2017). «Mobile robot with vision based navigation and pedestrian detection». Ingenius. No. 17, (January-June). pp. 67-72. ISSN: 1390-650X.

1. Introduction

Autonomous navigation has been a research area of growing interest since the 2004 DARPA Grand Challenge. Several vision-based vehicles have been developed [1–3]. Urban navigation poses many challenges, such as perception [4, 5], obstacle avoidance [6, 7], people detection [8–11] and video stabilization [12–14].

Many pedestrian detection algorithms have been developed because of their diverse applications, which include surveillance [15–17], driver assistance systems [11, 18, 19], robotics [20, 21], and others. According to [22], most approaches use detectors based on HAAR-like features [23], HOG [24, 25], LBP [26], and combinations such as HOG-HAAR [27] or HOG-LBP [28]. In [29], HAAR-like features showed low performance in the pedestrian detection task.

In this work, we experimentally test two feature extraction algorithms for pedestrian detection, Histogram of Oriented Gradients (HOG) [24] and Local Binary Patterns (LBP) [26], combined with Adaptive Boosting (AdaBoost), a supervised learning algorithm, and apply them to mobile robot navigation. The video is transmitted via Wi-Fi to a ground station, which determines the robot's motion direction using image processing; the control actions are then sent from the computer to the robot via Bluetooth.

The rest of the article is organized as follows. Section 2 reviews related work. Section 3 reviews the two algorithms used for pedestrian detection. Section 4 describes our approach to the robot's development. Finally, Sections 5 and 6 present the results and conclusions.

2. Related Works

In the literature, several applications integrate people tracking with mobile robots. A ground autonomous vehicle designed to track people using vision was proposed in [30]; in that work, the person to be tracked must wear a distinguishable rectangle.

In [20], the authors present a mobile platform that uses multi-sensor fusion, combining three kinds of sensors (RGB-D vision, lasers and a thermal sensor) to detect people. The mobile robot in [21] uses an omnidirectional camera and a laser range finder to detect and track people. In [31], a robot that assists elderly people using a Kinect device and ROS packages is introduced. Detecting and localizing people is an important aspect of interactive robotic applications. The work presented in [32] addresses the task of searching for people in home environments with a mobile robot. The method uses color and gradient models of the environment and a color model of the user, and it is evaluated in real-world experiments in which the robot searches for the user at different places.

We propose a robot with a simple structure (equipped only with a smartphone camera for navigation) capable of detecting and tracking a person's orientation and translation using pedestrian detection algorithms. In contrast with some works in the literature, our robot performs the navigation task using PI control, which allows relatively high speeds while avoiding braking issues.

3. Pedestrian Detection Algorithms

3.1. Histogram of Oriented Gradients (HOG)

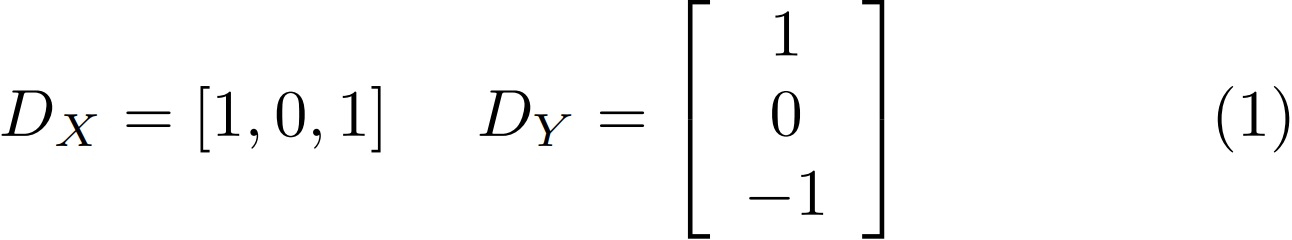

HOG is a feature descriptor for object detection, introduced in [24] with a focus on pedestrian detection. The image window is divided into smaller regions called cells. For each cell, a local 1-D histogram of the gradient orientations of its pixels is accumulated. The gradient orientation is given by equations 1 and 2:

Where $I$ is the image and $I_x$, $I_y$ are its derivatives in $x$ and $y$.
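For reference, the usual formulation in [24] approximates the derivatives with the centered mask [-1, 0, 1] and computes a gradient magnitude $m$ and orientation $\theta$ per pixel. This is the standard form from the literature, shown here as an illustration; the exact notation of equations 1 and 2 may differ.

```latex
\begin{aligned}
I_x(x,y) &= I(x+1,y) - I(x-1,y), \qquad I_y(x,y) = I(x,y+1) - I(x,y-1) \\
m(x,y) &= \sqrt{I_x(x,y)^2 + I_y(x,y)^2} \\
\theta(x,y) &= \arctan\frac{I_y(x,y)}{I_x(x,y)}
\end{aligned}
```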

The histogram of each cell is divided into angular bins according to the gradient orientation, and each pixel of the cell contributes a vote, weighted by its gradient magnitude, to the corresponding angular bin. Adjacent cells are grouped into larger spatial regions called blocks, and the normalized group of cell histograms forms the block histogram. Finally, the set of all block histograms forms the descriptor.
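The cell-histogram and block-normalization steps described above can be sketched in pure Python. This is a simplified illustration under our own assumptions, not the implementation used on the robot: 8×8-pixel cells, 9 unsigned orientation bins covering 0 to 180 degrees, hard assignment of each pixel to a single bin (the original method in [24] interpolates votes between neighboring bins), and L2 normalization of each block. The names `cell_histogram` and `normalize_block` are hypothetical.

```python
import math


def cell_histogram(img, x0, y0, cell=8, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations
    (0 to 180 degrees) for one cell whose top-left pixel is (x0, y0).
    img is a list of rows of grayscale values."""
    h, w = len(img), len(img[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(y0, y0 + cell):
        for x in range(x0, x0 + cell):
            # Centered differences with the [-1, 0, 1] mask, clamped at borders.
            ix = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            iy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            mag = math.hypot(ix, iy)
            # Unsigned orientation: fold angles into [0, 180).
            theta = math.degrees(math.atan2(iy, ix)) % 180.0
            # Hard binning: the whole magnitude goes to one bin.
            hist[int(theta // bin_width) % bins] += mag
    return hist


def normalize_block(histograms, eps=1e-6):
    """L2-normalize the concatenated histograms of the cells in one block."""
    v = [value for hist in histograms for value in hist]
    norm = math.sqrt(sum(value * value for value in v) + eps * eps)
    return [value / norm for value in v]
```

For an 8×8 patch whose left half is 0 and right half is 100, the edge is vertical, so every gradient points horizontally and all the gradient energy (1600 in total) falls in bin 0.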

3.2. Local Binary Patterns (LBP)

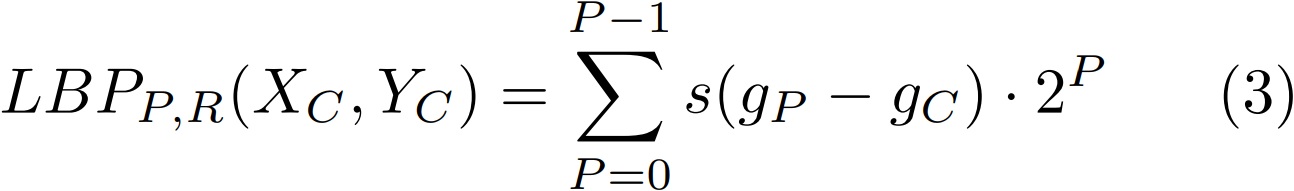

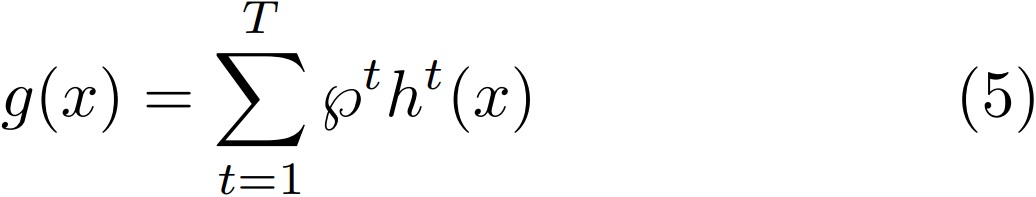

This algorithm was presented as a texture descriptor for object detection. It compares a central pixel with its neighbors: the central pixel value is taken as a threshold, and a value of "1" is assigned if the neighbor is greater than or equal to the central pixel; otherwise the value is "0". Each neighbor is given a weight of $2^p$ according to its position relative to the central pixel [26]. The LBP operator has two parameters, R and P, where R is the distance from the neighbors to the central pixel and P is the number of neighboring pixels [14]. LBP is defined mathematically as:

$$\mathrm{LBP}_{P,R} = \sum_{p=0}^{P-1} s(g_p - g_c)\,2^{p} \tag{3}$$

In equation 3, $g_c$ is the value of the central pixel, $g_p$ is the value of neighbor $p$, and $2^p$ is the weight established for each comparison. The function $s(g_p - g_c)$ is given by:

$$s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{4}$$
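Equations 3 and 4 can be illustrated with a short sketch for P = 8 and R = 1 on the integer pixel grid (no interpolation of neighbor positions). The clockwise neighbor ordering starting at the top-left pixel is an arbitrary choice made here; any fixed ordering yields a valid, if different, code. Uniform patterns and other LBP variants are not covered, and the names `lbp_code` and `lbp_histogram` are hypothetical.

```python
# Offsets (dy, dx) of the 8 neighbors at radius 1, ordered so that
# neighbor p receives weight 2**p (top-left first, then clockwise).
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def s(x):
    """Thresholding function of equation 4."""
    return 1 if x >= 0 else 0


def lbp_code(img, y, x):
    """LBP_{8,1} code of the interior pixel (y, x), following equation 3."""
    gc = img[y][x]
    code = 0
    for p, (dy, dx) in enumerate(NEIGHBORS):
        gp = img[y + dy][x + dx]
        code += s(gp - gc) * (1 << p)
    return code


def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            hist[lbp_code(img, y, x)] += 1
    return hist
```

For the 3×3 patch `[[5, 9, 1], [4, 6, 7], [2, 6, 8]]`, the neighbors 9, 7, 8 and 6 are greater than or equal to the center value 6; with this ordering they sit at positions 1, 3, 4 and 5, giving the code 2 + 8 + 16 + 32 = 58.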

3.3. Adaboost

AdaBoost is a machine learning algorithm [33] that initially assigns uniform weights to all training samples. In the first iteration, the algorithm trains a weak classifier using a feature extraction method, or a combination of methods, that achieves the best recognition performance on the weighted training samples.

In the second iteration, the training samples misclassified by the first weak classifier receive higher weights.

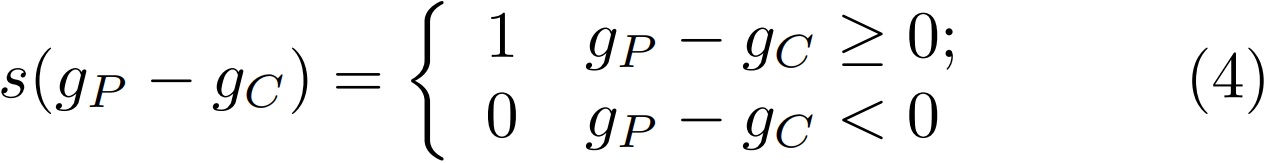

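The newly selected feature extraction methods should therefore focus on these misclassified samples. The final result is a cascade of linear combinations of the selected weak classifiers:

$$H(x) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t\, h_t(x)\right) \tag{5}$$

where $h_t$ is the weak classifier selected at iteration $t$, $\alpha_t$ is its weight, and $T$ is the number of iterations.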
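The reweighting loop described above can be sketched as discrete AdaBoost with decision stumps on a single scalar feature. This is an assumption made for illustration: in the detector, the weak classifiers operate on HOG or LBP features, and this sketch trains one strong classifier rather than a cascade. Each weak classifier $h_t$ receives the standard weight $\alpha_t = \tfrac{1}{2}\ln\frac{1-\varepsilon_t}{\varepsilon_t}$, where $\varepsilon_t$ is its weighted error. The names `train_stump`, `adaboost` and `predict` are hypothetical.

```python
import math


def stump(x, thr, polarity):
    """Weak classifier: +polarity above the threshold, -polarity below."""
    return polarity if x >= thr else -polarity


def train_stump(xs, ys, w):
    """Pick the threshold/polarity pair with the lowest weighted error."""
    best = None
    for thr in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(wi for wi, x, y in zip(w, xs, ys) if stump(x, thr, polarity) != y)
            if best is None or err < best[0]:
                best = (err, thr, polarity)
    return best


def adaboost(xs, ys, rounds=5):
    """Labels ys must be +1 or -1. Returns a list of (alpha, thr, polarity)."""
    n = len(xs)
    w = [1.0 / n] * n  # uniform initial weights
    model = []
    for _ in range(rounds):
        err, thr, pol = train_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, thr, pol))
        # Misclassified samples (y * h(x) = -1) gain weight; correct ones lose it.
        w = [wi * math.exp(-alpha * y * stump(x, thr, pol)) for wi, x, y in zip(w, xs, ys)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model


def predict(model, x):
    """Sign of the weighted vote, as in equation 5."""
    score = sum(alpha * stump(x, thr, pol) for alpha, thr, pol in model)
    return 1 if score >= 0 else -1
```

For example, with `xs = [1, 2, 3, 4, 5, 6]` and `ys = [-1, -1, -1, 1, 1, 1]`, the first stump thresholds at 4 with zero error, and `predict(model, 5)` returns 1.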

4. Our Approach

4.1. Mobile Robotic Platform

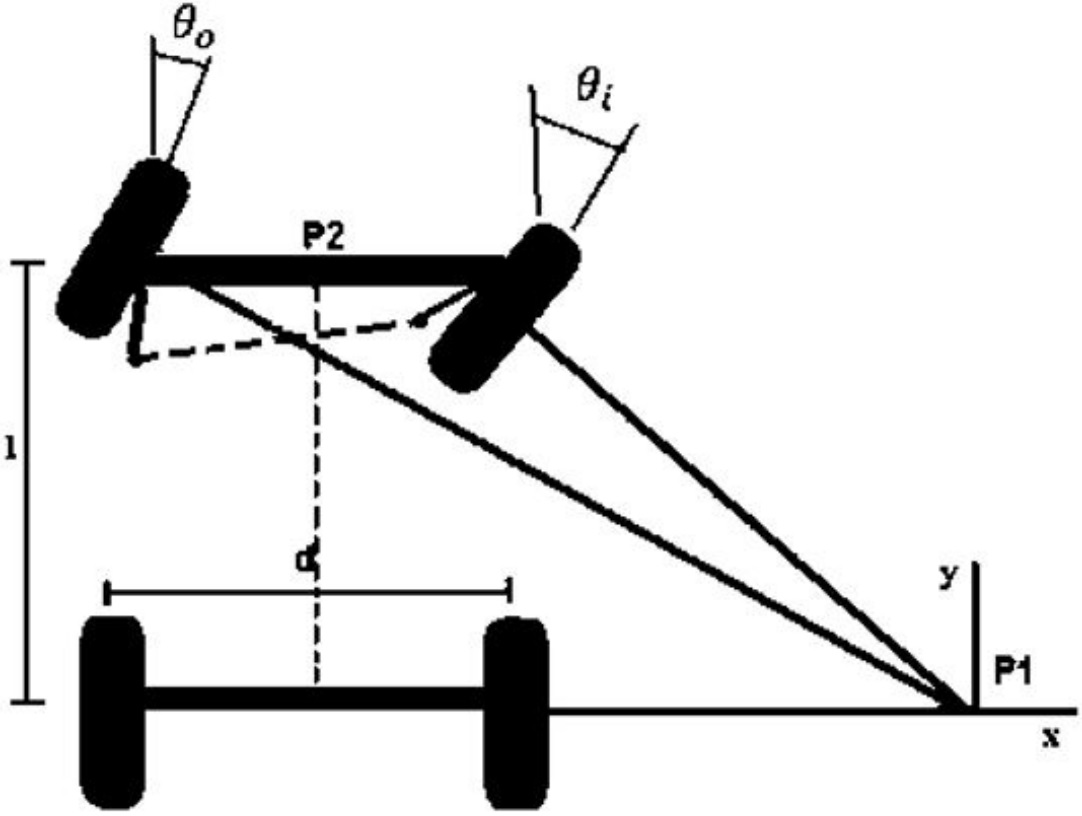

The robot uses the Ackermann steering configuration [19], i.e., two rear traction wheels and two front steering wheels, as shown in Fig. 1. The system is designed so that, during a turn, the inner front wheel steers at a slightly sharper angle than the outer one in order to avoid skidding: the lines perpendicular to the wheels intersect at a single point on the extension of the rear axle, so that for a constant steering angle the robot follows a circular path.

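The relationship between the steering angles of the front wheels is given by equation 6:

$$\cot\delta_o - \cot\delta_i = \frac{w}{l} \tag{6}$$

where:

$\delta_o$: steering angle of the outer front wheel.

$\delta_i$: steering angle of the inner front wheel.

$w$: track width (distance between the steering pivots of the front wheels).

$l$: wheelbase (distance between the front and rear axles).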
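Equation 6 follows from placing the turn center on the extension of the rear axle. A minimal sketch, assuming the turning radius R is measured from the turn center to the midpoint of the rear axle, computes both steering angles from $\tan\delta_i = l/(R - w/2)$ and $\tan\delta_o = l/(R + w/2)$; subtracting the cotangents gives $w/l$, as in equation 6. The function name and the example dimensions are hypothetical, not the robot's actual geometry.

```python
import math


def ackermann_angles(wheelbase, track, radius):
    """Inner and outer front-wheel steering angles (radians) for a turn whose
    radius is measured from the turn center to the middle of the rear axle.
    Requires radius > track / 2."""
    inner = math.atan(wheelbase / (radius - track / 2))
    outer = math.atan(wheelbase / (radius + track / 2))
    return inner, outer


# Hypothetical dimensions in meters: l = 0.25, w = 0.18, R = 1.0.
inner, outer = ackermann_angles(0.25, 0.18, 1.0)
# Ackermann condition (equation 6): cot(outer) - cot(inner) = w / l.
residual = 1 / math.tan(outer) - 1 / math.tan(inner) - 0.18 / 0.25
```

As expected, the inner angle is sharper than the outer one, and the residual of equation 6 is zero up to rounding error.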

For traction and steering control, the system uses Pulse Width Modulation (PWM) in a chopper configuration [34]. Additionally, a smartphone camera is used for navigation.

The robot has two motors, one for forward/reverse motion and another for steering. The motors are driven by H-bridge circuits. The smartphone camera is used as an IP camera for image capture.
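The direction and speed control of each motor can be sketched as a mapping from a normalized command to the two direction inputs of an H-bridge and a PWM duty value. We assume an 8-bit PWM (0 to 255) and a driver whose inputs IN1 and IN2 select the direction, as in common H-bridge modules; the paper does not specify the microcontroller or driver, and `hbridge_command` is a hypothetical name.

```python
def hbridge_command(u, max_duty=255):
    """Map a normalized command u in [-1, 1] to (in1, in2, duty).
    in1/in2 select the H-bridge direction; duty is the PWM value
    (8-bit range assumed)."""
    u = max(-1.0, min(1.0, u))  # saturate to the valid command range
    duty = round(abs(u) * max_duty)
    if duty == 0:
        return 0, 0, 0  # both inputs low: on many drivers the motor coasts
    if u > 0:
        return 1, 0, duty  # forward
    return 0, 1, duty  # reverse
```

The same mapping could serve both the traction motor (sign selects forward or reverse) and the steering motor (sign selects left or right).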

4.2. Pedestrian Detection

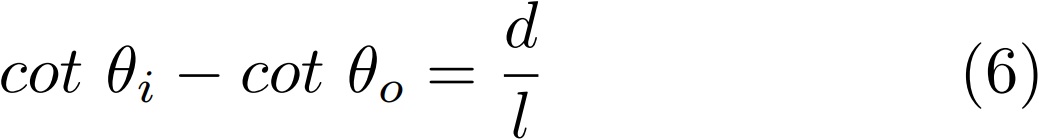

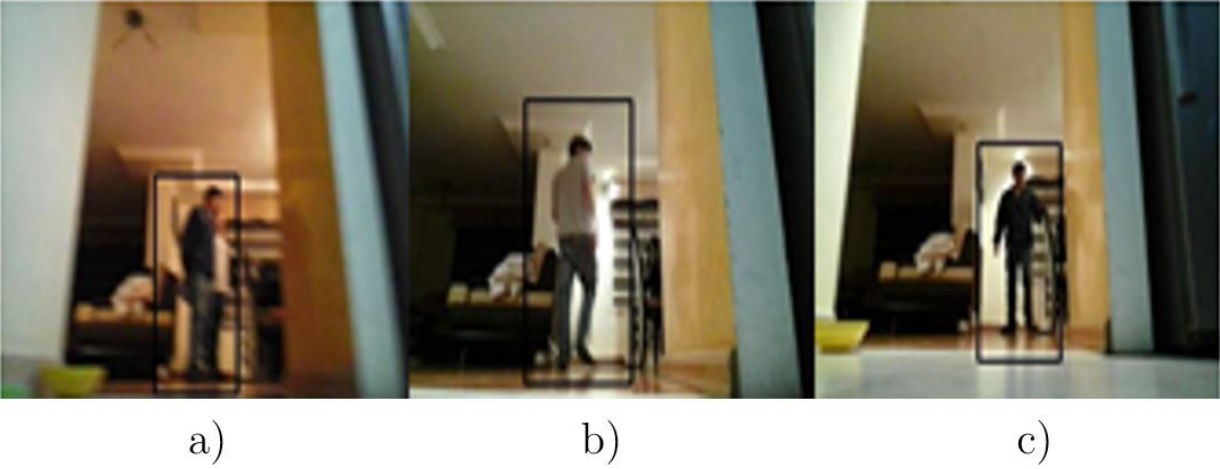

The feature extraction algorithms described above are applied on the mobile robot to obtain an autonomous navigation system based on pedestrian tracking. We use AdaBoost as the machine learning method; it is based on the idea of creating a highly accurate prediction rule by combining many relatively weak and inaccurate rules [33]. Fig. 2 shows detections of the HOG algorithm on images captured by the mobile robot.

Figure 2. Pedestrian detection with the HOG algorithm. (a) Side detection of a person. (b) Back detection of a person. (c) Frontal detection of a person.

Fig. 3 shows the performance of the LBP algorithm on images captured by the mobile robot.

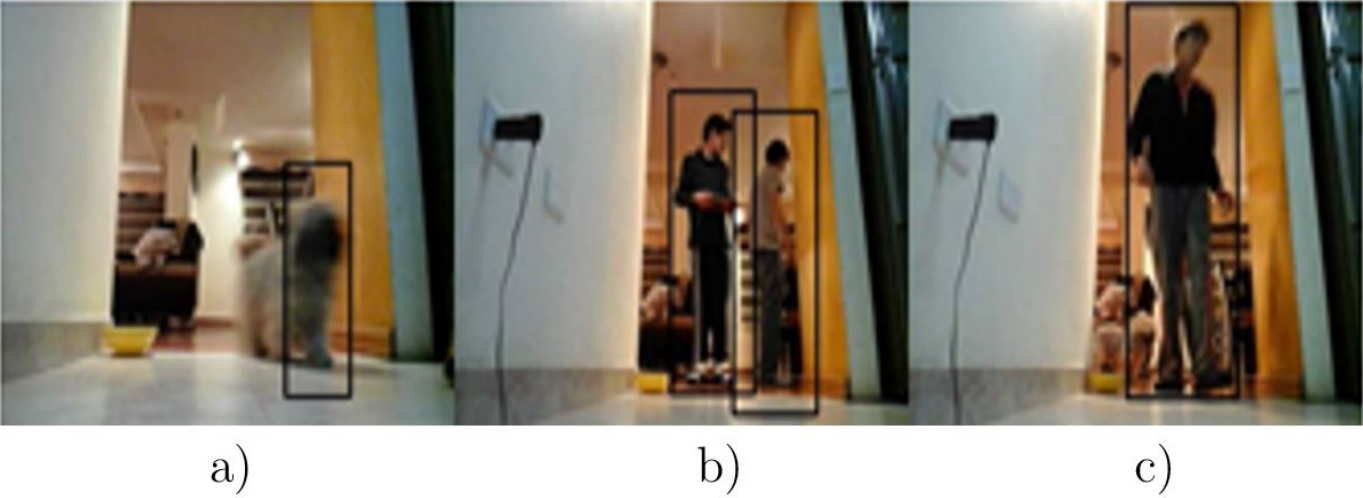

4.3. Controller

The controller of the mobile robot uses two main inputs (needed for 2D navigation [35]): the horizontal position and the distance, both obtained from the bounding box of the pedestrian detection. The horizontal position depends on the x coordinate of the bounding box centroid, and the distance depends on the width of the bounding box. For the distance, we use a PI controller tuned to reduce overshoot so that the response is smooth. For the horizontal position, we use an on-off controller with hysteresis, i.e., with a band defined by maximum and minimum output values, because the range of variation of the actuators is small and high precision is not necessary (Fig. 4).
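A minimal sketch of the two controllers, assuming the detector provides the bounding box width (for distance) and the centroid x coordinate (for horizontal position) in pixels. The gains, setpoint and band widths are hypothetical values chosen for illustration, not the values tuned on the robot. The PI controller clamps its output to the actuator range and discards the integration step while saturated, a simple anti-windup choice of ours. The on-off controller keeps its previous state while the centroid lies between the inner and outer bands; this hysteresis prevents the steering from chattering around the image center.

```python
class PIController:
    """PI control of distance: a narrow box (person far away) gives a
    positive error and drives the robot forward."""

    def __init__(self, kp, ki, dt, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.dt = kp, ki, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt
        u = self.kp * error + self.ki * self.integral
        if u > self.out_max or u < self.out_min:
            # Saturated: undo this integration step (anti-windup) and clamp.
            self.integral -= error * self.dt
            u = max(self.out_min, min(self.out_max, u))
        return u


class HysteresisSteering:
    """On-off steering with hysteresis: -1 = turn left, 0 = straight,
    +1 = turn right."""

    def __init__(self, center, outer, inner):
        self.center, self.outer, self.inner = center, outer, inner
        self.state = 0

    def update(self, cx):
        offset = cx - self.center
        if offset > self.outer:
            self.state = 1
        elif offset < -self.outer:
            self.state = -1
        elif abs(offset) < self.inner:
            self.state = 0
        # Between the inner and outer bands: keep the previous state.
        return self.state
```

With `HysteresisSteering(center=320, outer=60, inner=20)`, a centroid at x = 400 switches to turning right, x = 360 keeps turning, and x = 335 returns to straight.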

4.4. Communication

Wi-Fi is used to transmit data from the IP camera to the computer, because it is able to carry image data. On the other side, Bluetooth is used to send control commands from the computer to the robot. The Bluetooth transmission speed, 115200 baud, was selected experimentally (see Section 5.2).

5. Experimentation and Results

5.1. Pedestrian Detection

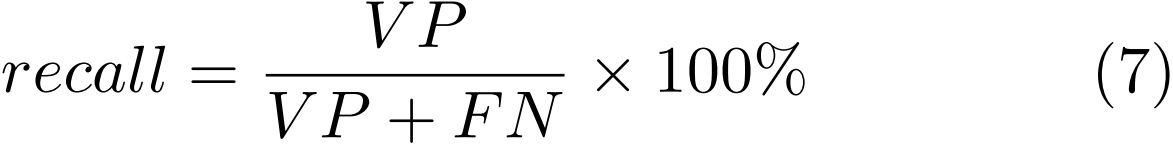

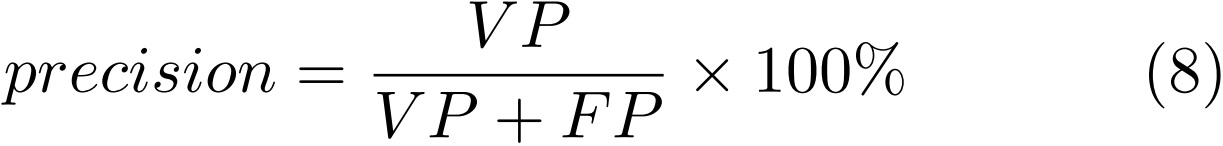

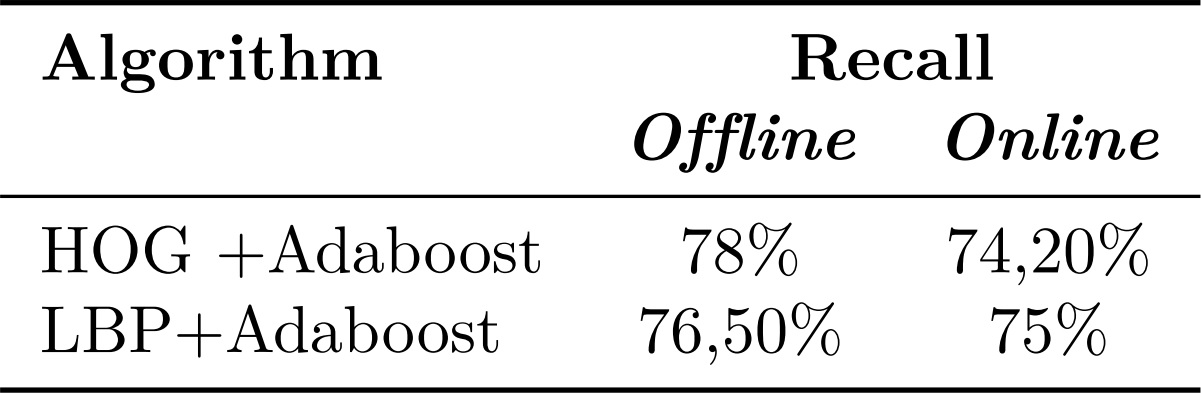

The performance of the pedestrian detection algorithms was compared using online and offline videos. We use recall and precision as evaluation metrics, given by equations 7 and 8, respectively:

$$\text{Recall} = \frac{TP}{TP + FN} \tag{7}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{8}$$

where:

TP: true positives.

FN: false negatives.

FP: false positives.

We performed two experiments, offline and online. For the offline test, we used four different security camera videos obtained from the Internet. We extracted 1000 frames, split into groups of 30 frames. For each group, we determined the true positives, true negatives, false positives and false negatives for the HOG and LBP algorithms. Image processing was performed on a computer with a 2.4 GHz processor and 4 GB of RAM. For the online test, we recorded 7 minutes of video, i.e., 12600 frames, and followed the same procedure as in the offline test. The results are presented in Table 1 and Table 2.
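The evaluation procedure can be sketched as follows, assuming the per-group counts are pooled over all groups before applying equations 7 and 8 (micro-averaging); the paper does not state whether the metrics were pooled or averaged per group. If the remainder is kept as a final group, 1000 frames yield 33 full groups of 30 plus one group of 10; likewise, 12600 frames over 7 minutes (420 s) correspond to 30 frames per second. The function names are hypothetical.

```python
def frame_groups(n_frames, size=30):
    """Split frame indices into consecutive groups; the last may be shorter."""
    return [range(start, min(start + size, n_frames)) for start in range(0, n_frames, size)]


def recall(tp, fn):
    """Equation 7."""
    return tp / (tp + fn) if tp + fn else 0.0


def precision(tp, fp):
    """Equation 8."""
    return tp / (tp + fp) if tp + fp else 0.0


def evaluate(groups):
    """groups: list of (tp, fp, fn) counts, one tuple per group of frames.
    Counts are pooled before computing the metrics (micro-averaging)."""
    tp = sum(g[0] for g in groups)
    fp = sum(g[1] for g in groups)
    fn = sum(g[2] for g in groups)
    return recall(tp, fn), precision(tp, fp)
```

For two hypothetical groups with counts (8, 2, 2) and (9, 1, 1), the pooled totals are TP = 17, FP = 3 and FN = 3, so both recall and precision equal 17/20 = 0.85.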

Table 1 presents the recall of the detectors tested offline and online.

HOG achieves better precision than LBP because LBP produces more false positives in both cases (offline and online). The LBP algorithm compares image textures and easily confuses other objects with people. HOG is a descriptor based on object shape and designed principally for pedestrians [24]; thus it performs better, but at a higher computational cost. Based on these results, we chose HOG, because its computational cost can be offset by using a more powerful processor.

5.2. Controller response and communication

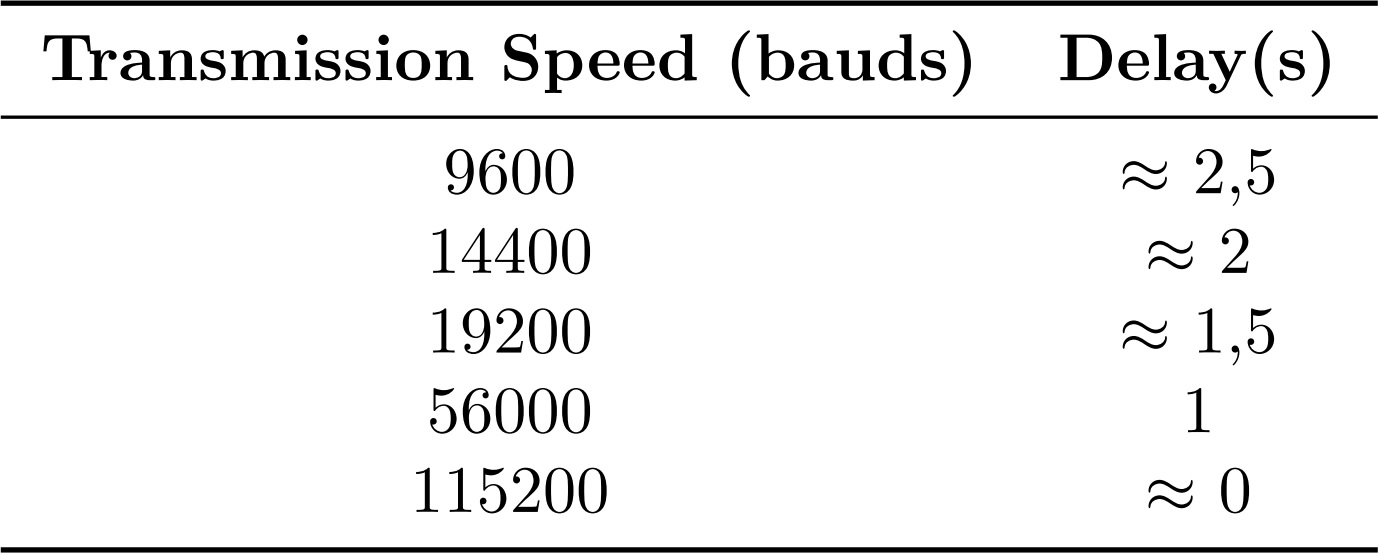

During the implementation of the robot, we found that the response time of the controller varied with the Bluetooth transmission speed; the test results are presented in Table 3.

Note that the delays were measured from the perceived response of the controller. Based on these results, a transmission rate of 115200 baud was chosen.
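To put the baud rates in perspective, the serial transmission time of a control command can be estimated as follows. The sketch assumes 8N1 framing (10 bits per byte) and a hypothetical 4-byte command; it covers only the serial clocking time, not the Bluetooth radio, operating-system buffering or processing delays that also contribute to the perceived response. If commands are produced faster than the link can send them (utilization above 1), they queue and the delay grows over time. The function names are hypothetical.

```python
def serial_time_ms(n_bytes, baud, bits_per_byte=10):
    """Time (ms) to clock n_bytes over an asynchronous serial link.
    bits_per_byte=10 assumes 8N1 framing: 1 start, 8 data, 1 stop bit."""
    return 1000.0 * n_bytes * bits_per_byte / baud


def link_utilization(n_bytes, commands_per_second, baud, bits_per_byte=10):
    """Fraction of the link capacity used; values above 1 mean commands queue up."""
    return n_bytes * bits_per_byte * commands_per_second / baud
```

Under these assumptions, a 4-byte command takes about 4.17 ms at 9600 baud and about 0.35 ms at 115200 baud, a twelvefold reduction.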

6. Conclusions

The HOG algorithm performs better offline; however, its implementation has a higher computational cost. LBP performed worse offline, but its performance was not affected in the online experiments. Its higher false positive rate results in low precision.

The classical PI controller provided smooth braking to keep a safe distance between the mobile robot and the pedestrian. On-off control of the horizontal position is a simple approach that performed well in maintaining the navigation direction.

A slow controller response endangered the safety of pedestrians, so the transmission speed had to be increased, which improved the results. The response time was also affected by the parameters of the PI controller. People-tracking algorithms are useful in many applications. Based on the results obtained, the pedestrian detection algorithms could be improved with techniques such as specialized training or video preprocessing, at the cost of increased computational load.