I. INTRODUCTION

The growing demand for network services, applications, and resources from end-users has strained the capacity of service providers, which lack the hardware resources needed to scale proportionally to that demand. Service providers have had to adopt new technologies to meet current demands, maximize resource efficiency, and offer end-users a high Quality of Service (QoS). In this context, virtualization technologies play a fundamental role in the information technology industry. While virtualization technologies such as Virtual Machines (VMs) provide virtualized services, they present significant performance and resource efficiency problems. For this reason, container-based virtualization technologies have become the preferred choice, as they offer highly efficient virtualized services by operating directly on a device’s native software infrastructure, leveraging the features of an operating system kernel to create virtualization. These features include ‘namespaces’ and ‘cgroups’, which provide an isolated and independent environment within the native infrastructure in which they run(1).

This paper aims to describe, implement, and analyze a solution for network services based on Docker containers. It analyzes the performance of containerized services in environments with limited CPU, memory, and storage resources, such as Raspberry Pi development boards. To achieve this, the work is structured as follows:

Section II: A brief description of concepts and related work on virtualization technologies, virtual machines, Docker containers, network services, and microservices is provided.

Section III: Describes the methodology, test environment, software tools, and hardware used for designing and implementing network services using Docker containers.

Section IV: Presents the results obtained in the implementation and evaluates the performance of the implemented containerization system based on CPU usage, memory usage, and load.

Section V: Provides concluding remarks about the work developed.

II. BACKGROUND AND RELATED WORK

This section presents the fundamental concepts of virtualization architectures based on Virtual Machines (VMs), followed by container-based virtualization, emphasizing Docker technology. Additionally, we will provide a brief description of microservices infrastructure.

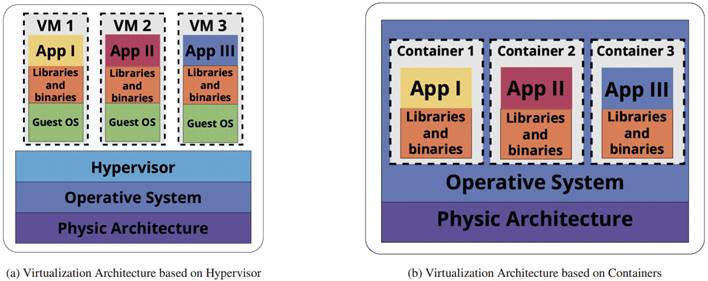

Virtualization is a process or technology that allows the segmentation of software and hardware resources from a physical architecture to deploy multiple dedicated resources in virtual environments for processes or applications. The main virtualization technologies include those based on hypervisors and container-based technologies. Hypervisor-based technology adds a layer of software, known as the hypervisor, to the conventional computational architecture, specifically on top of the underlying operating system. This layer virtualizes and manages the system’s physical resources, such as CPU, RAM, and storage, distributing them among the Virtual Machines (VMs). Thus, the hypervisor creates an isolated environment for each VM with its own complete operating system, allowing execution independent of the main operating system(2). However, this hypervisor-based approach may decrease overall performance, especially in environments with high resource demand. It is important to note that this hypervisor layer is present in all traditional virtualization technologies, meaning its negative impact on performance is present in every implementation, as mentioned in (3).

On the other hand, containerization technologies implement virtualization at the kernel level of the operating system. They enable the execution of applications and services in isolated and portable environments within the same physical system, called containers(2). Additionally, using namespaces and cgroups, this technology provides isolation and resource management for each container(4).
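As an illustrative sketch (not part of the original work), the cgroup membership that the kernel records for a containerized process can be inspected by parsing the contents of `/proc/<pid>/cgroup`, whose lines follow the `hierarchy-ID:controller-list:cgroup-path` layout described in the Linux cgroups manual page. The sample text and the container identifier `abc123` below are hypothetical.

```python
from typing import List, NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: List[str]
    path: str


def parse_cgroup_file(text: str) -> List[CgroupEntry]:
    """Parse text in the /proc/<pid>/cgroup format into structured entries."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split into exactly three fields; the path itself may contain ':'.
        hierarchy, controllers, path = line.split(":", 2)
        names = [c for c in controllers.split(",") if c]
        entries.append(CgroupEntry(int(hierarchy), names, path))
    return entries


# Hypothetical sample: a cgroup v2 line (empty controller list) and a v1 line.
SAMPLE = "0::/system.slice/docker-abc123.scope\n4:cpu,cpuacct:/docker/abc123\n"

if __name__ == "__main__":
    for entry in parse_cgroup_file(SAMPLE):
        print(entry)
```

On a Linux host, passing the contents of `/proc/self/cgroup` read from inside a container would show the cgroup path that bounds that container's resources.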

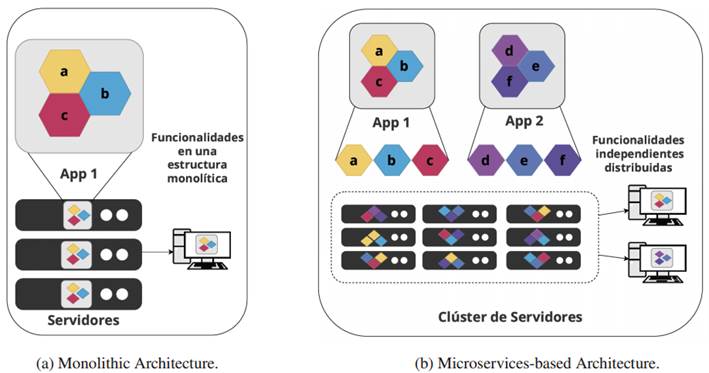

In practice, container processes outperform hypervisor-based solutions by eliminating the virtualization layer and operating directly on the host system’s kernel, as shown in Figure 1 (2). In addition to this advantage, containers have inherent characteristics derived from underlying technologies, such as autonomy and independence between containers in resource usage and deployment. Portability is also noteworthy, as containers use lightweight application images, usually on the order of MBs, compared to the GB-sized images of VMs that include a complete operating system. These features enable services to achieve high scalability and easy migration. Furthermore, they ensure high service availability by allowing the execution of multiple instances of the same application to maintain uninterrupted service, even if one or more containers of the same image stop working(1),(3),(5). These advantages are enhanced when combined with a microservices-based architecture, which defines a software design model in which the functions of a service are distributed across autonomous and independent modules (Figure 2b), unlike the traditional architecture of monolithic applications, where functions are integrated into a single structure and are interdependent (Figure 2a)(6). Container characteristics allow this type of model to be leveraged to optimize service deployment, unlike VM-based approaches.

On the other hand, containers also have disadvantages compared to VMs. For example, although containers are independent and isolated entities, they do not provide complete isolation from the host operating system, because they share its kernel resources, whereas VMs run their own kernel. This, in turn, poses a security issue, as any impact at the operating system level can affect the containers(7),(8),(9).

In environments with limited hardware resources, such as the one in this study, a container-based approach leverages these characteristics for optimal and scalable service deployment. Security is not a parameter of study here; this work focuses exclusively on measuring resource usage.

Docker is an open-source containerization technology that creates, runs, and manages lightweight, portable, and self-sufficient containers. These features have established Docker as a leader in the containerization technology market(10). Docker implements its architecture on the operating system kernel to achieve container deployment with these characteristics, utilizing namespaces and cgroups to isolate and manage container resources. Docker also uses a file system known as the ‘Advanced Union File System’ (AUFS) for layer-based image construction, contributing to storage resource optimization during Docker image creation(11).

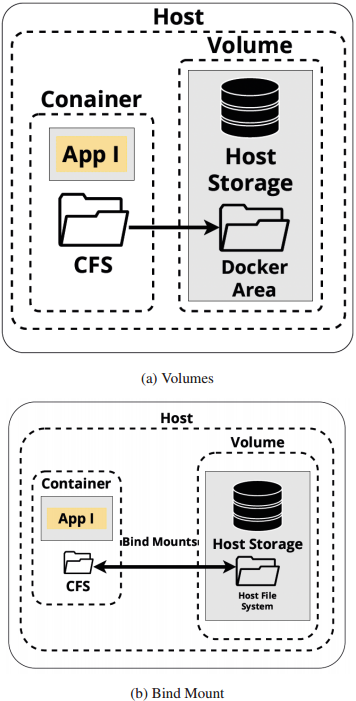

Above the underlying technologies sits Docker’s architecture, known as the ‘Docker engine’, which is the specific name for the containerization technology developed by Docker. This architecture comprises ‘Docker objects’, representing functionalities within the Docker environment. These functionalities relate to storage, networking, container images, and containers. These elements can have different types depending on their utility and characteristics. Docker objects related to storage functionality are called ‘Docker volumes’. These are storage mechanisms created from directories or files stored on the host. Once created, directories are mounted into the container to access a file system outside the isolated environment, as shown in Figure 3a (12).

In addition, there are storage mechanisms similar to volumes, known as ‘bind mounts’. Unlike volumes, these are not managed by Docker. They allow a specific folder on the host to be mounted into a container, and the stored data persists beyond the container’s lifecycle, as illustrated in Figure 3b (13). ‘Volumes’ and ‘bind mounts’ can be used by multiple containers to share the same storage space. Additionally, these mechanisms can be employed for migrating data from containerized services, given their ability to maintain data persistence between containers and over time.
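The difference between the two storage mechanisms can be sketched with the `--mount` option of `docker run`, which accepts `type=volume` or `type=bind` together with `source` and `target` keys. The following minimal example only builds the command line and does not execute it; the image name, volume name, and paths are hypothetical and are not taken from the implementation described in this paper.

```python
from typing import List


def mount_flag(kind: str, source: str, target: str, read_only: bool = False) -> str:
    """Build the value of a `docker run --mount` option."""
    if kind not in ("volume", "bind"):
        raise ValueError("kind must be 'volume' or 'bind'")
    # A bind mount refers to a host path, so an absolute path is required here.
    if kind == "bind" and not source.startswith("/"):
        raise ValueError("bind mounts need an absolute host path")
    flag = f"type={kind},source={source},target={target}"
    if read_only:
        flag += ",readonly"
    return flag


def docker_run_command(image: str, mounts: List[str]) -> List[str]:
    """Assemble (but do not run) a detached `docker run` command."""
    command = ["docker", "run", "-d"]
    for mount in mounts:
        command += ["--mount", mount]
    command.append(image)
    return command


if __name__ == "__main__":
    mounts = [
        # Named volume managed by Docker (hypothetical name and path).
        mount_flag("volume", "dns-logs", "/var/log/named"),
        # Bind mount of a host configuration file (hypothetical paths).
        mount_flag("bind", "/home/pi/dns/named.conf", "/etc/bind/named.conf", read_only=True),
    ]
    print(" ".join(docker_run_command("example/dns:latest", mounts)))
```

Passing the same named volume to a second container would let both share the stored data, which matches the sharing behavior described above.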

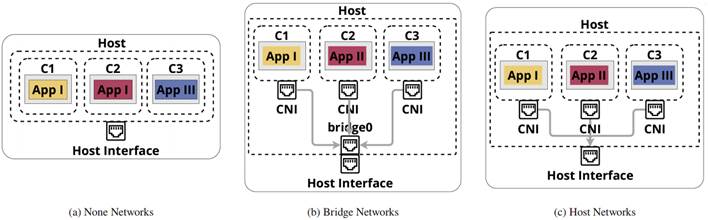

Regarding network functionalities, Docker offers what are known as ‘Docker networks’, entities responsible for providing basic network functionalities through ‘network drivers’, which share the same name as the network they manage(14). Docker provides three default networks:

None: This Docker network has no network interface outside the container. It only has a connection between the container and the loopback interface, and it is commonly used for offline testing, as illustrated in Figure 4a (15).

Bridge: The ‘bridge’ network is Docker’s default network and uses Linux’s bridge functionality to allow communication between containers. Docker creates virtual connections between containers and the virtual network interface called ‘docker0’, as shown in Figure 4b. An internal network is created when this connection is established, and IP addresses are automatically assigned to each container, enabling communication within this network. Initially, however, containers cannot communicate outside of this network. Using iptables and Docker’s Network Address Translation (NAT), it is possible to configure port mapping to allow communication between the container network and the external network(16).

Host: The ‘host’ network allows the container to share the same network namespace as the host. In other words, the container shares all network interfaces of the host without any level of abstraction between them, as shown in Figure 4c. Due to this configuration, this network offers better performance than other networks, as it does not require addressing, port mapping, or NAT for container connections to an external network since the container network is identical to the host network. However, it is important to mention that if two or more containers attempt to use the same port under a host network, conflicts may arise(17).

It is worth mentioning that, according to(18),(19),(20), ‘bridge’ type networks tend to have lower performance than other types of networks. In contrast, ‘host’ type networks have no abstraction level that limits their performance, as is the case with ‘bridge’ type networks.
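The three default networks map onto the `--network` option of `docker run`, and port mapping on a bridge network uses `--publish`. The sketch below, which only assembles arguments, makes the distinction explicit. Rejecting published ports outside bridge mode is a design choice of this sketch rather than Docker's own behavior, and the port numbers are hypothetical.

```python
from typing import Iterable, List, Tuple


def network_args(mode: str, publish: Iterable[Tuple[int, int]] = ()) -> List[str]:
    """Build `docker run` networking arguments for one of the default networks."""
    if mode not in ("none", "bridge", "host"):
        raise ValueError("mode must be 'none', 'bridge', or 'host'")
    args = ["--network", mode]
    publish = list(publish)
    if publish and mode != "bridge":
        # With 'host' the container already uses the host's ports directly,
        # and with 'none' there is no external interface to map to.
        raise ValueError("port publishing only applies to bridge networks")
    for host_port, container_port in publish:
        args += ["--publish", f"{host_port}:{container_port}"]
    return args


if __name__ == "__main__":
    print(network_args("none"))
    print(network_args("bridge", [(8080, 80)]))  # hypothetical ports
    print(network_args("host"))
```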

III. METHODOLOGY

This section describes the process and methodology for implementing network services in Docker containers. All files used for deploying the services, such as the ‘Dockerfiles’ and ‘Docker Compose’ files, along with the employed procedure, are available in (21). The images corresponding to the implemented services are also hosted in the Docker Hub repository in (22).

A. Scenario

The implemented solution is based on Docker Compose and utilizes two Raspberry Pi boards to carry out a multi-container deployment of services. These services constitute a traditional Internet architecture, including DHCP, DNS, FTP, Web, VoIP, and Routing. All of this is achieved through a YAML file. In this approach, one of the Raspberry Pi boards serves as the main host for the containerized services, while the second one is used for remote client connections through the containerized DHCP and Routing services.
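To give a sense of what such a YAML file may look like, the following sketch renders a minimal Compose file for a set of services. It is not the authors' actual file: the service names, image names, and paths are hypothetical, and only the standard Compose keys `services`, `image`, `network_mode`, and `volumes` are used. The `network_mode: host` setting is an assumption of this sketch.

```python
from typing import Dict, List


def render_compose(services: Dict[str, dict]) -> str:
    """Render a minimal Compose file as YAML text."""
    lines: List[str] = ["services:"]
    named_volumes = set()
    for name, spec in services.items():
        lines.append(f"  {name}:")
        lines.append(f"    image: {spec['image']}")
        lines.append("    network_mode: host")
        volumes = spec.get("volumes", [])
        if volumes:
            lines.append("    volumes:")
        for volume in volumes:
            lines.append(f"      - {volume}")
            source = volume.split(":")[0]
            # Sources that are not paths are treated as named volumes.
            if not source.startswith(("/", ".")):
                named_volumes.add(source)
    if named_volumes:
        # Named volumes must be declared at the top level of the file.
        lines.append("volumes:")
        for volume_name in sorted(named_volumes):
            lines.append(f"  {volume_name}:")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_compose({
        "dns": {
            "image": "example/dns:latest",  # hypothetical image name
            "volumes": ["dns-logs:/var/log/named", "./dns/named.conf:/etc/bind/named.conf"],
        },
        "web": {"image": "example/web:latest"},  # hypothetical image name
    }))
```

Saving the output as `docker-compose.yml` and running `docker compose up -d` would start all listed services from that single file.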

In this work, the performance of containerized services is examined over a wired connection in order to avoid the delays that a wireless network may introduce. This choice is essential for delay-sensitive services, such as VoIP, which require delays below 150 ms. A more detailed exploration of wireless operation is reserved for future work.

B. Physical and logical configurations

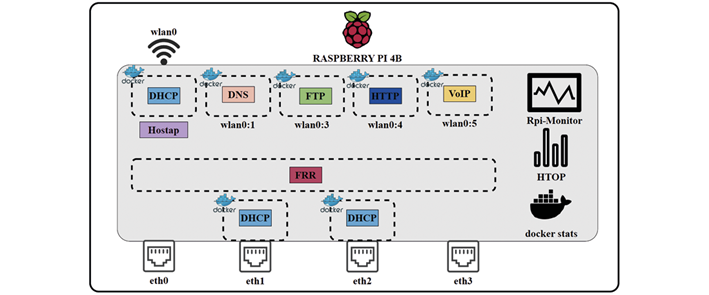

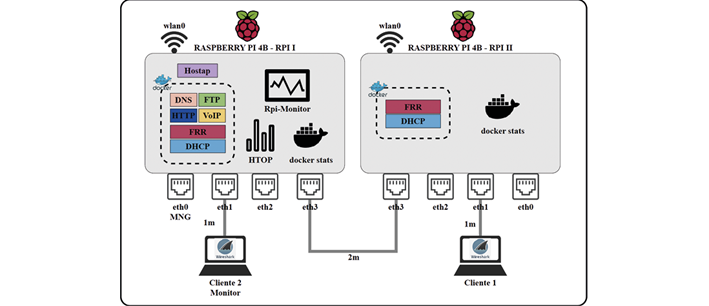

Each Raspberry Pi board uses Realtek RTL8152 USB-Ethernet adapters with up to 100baseT/Full-Duplex capacity. This is done to expand the number of physical ports available for the routing service. There are four Ethernet interfaces, one WLAN interface, and five virtual WLAN interfaces. These interfaces are associated with both an IPv4 address of a containerized service and a monitoring application, as shown in Figure 5, which represents the logical distribution of the implementation on the Raspberry Pi board. Figure 6 illustrates the topology for the joint implementation through Docker Compose. The Access Point function is also used on one of the Raspberry Pi boards using Hostap software. This is done to establish a wireless connection for network monitoring. The SSH remote access service is used to install the board’s dependencies and to access and configure it. This allows the equipment to be configured either wirelessly or through any available Ethernet port.

C. Base images for docker containers

The base images for Docker containers are based on Alpine Linux, the recommended choice for conserving storage resources in both the resulting images and container instances, as shown in(23). As for service images, Nginx, Asterisk, and FRRouting are already available as dedicated images in the ARM32v7 architecture. Therefore, instead of building these services completely, files based on these images are generated to leverage their functionality.

D. Storage

Two types of volumes are used for storage: Named Volumes and Bind Mounts. Named Volumes are used to maintain the persistence of logs from containerized services and to share them with other containers. On the other hand, bind mounts are used to configure container files directly from the host.

E. Docker networks

Regarding Docker networks, the ‘host’ type network is used exclusively. This choice is based on previous considerations about Docker network performance and aims to optimize the implementation’s performance.

F. Measurement tools, metrics and testing setup

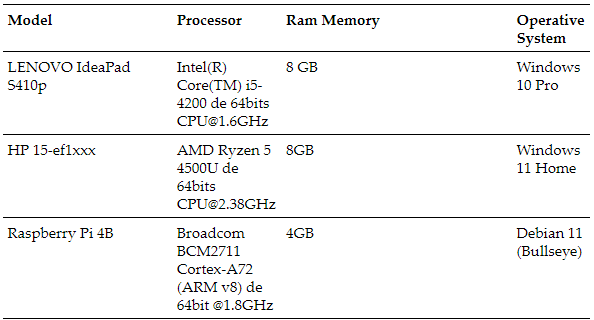

For the performance analysis of containerized services, various general performance parameters are considered for each containerized service, such as CPU usage, memory usage, CPU load average, and inbound and outbound network traffic, along with one physical parameter, CPU temperature. To perform this task, two computing devices are used to test the services: i) one to evaluate the service’s operation and ii) a second to monitor the host’s performance. Detailed descriptions of these devices can be found in Table I.

Client 2, acting as a monitoring device in the topology shown in Figure 6, connects wirelessly via SSH to collect these metrics using various software tools. Among them is the ‘docker stats’ command from Docker, which measures CPU and memory usage performance, and ‘Htop’ as a tool for visualizing CPU and RAM system resources and processes. Additionally, the RPI-Monitor tool, a monitoring software based on a web interface, is employed for system usage statistics visualization on Raspberry Pi devices. This allows access to performance metric data such as CPU load average, memory usage, and additional parameters like temperature.

Furthermore, for services such as VoIP it is essential to assess Quality of Service (QoS) parameters like delay and jitter(24). For this purpose, Wireshark software is used on each device in Figure 6, allowing the capture and analysis of data packets based on SIP and RTP protocols. This provides a deeper insight into the quality of real-time communication. Measurements are taken during specific periods for each containerized service, and the data is then tabulated according to the respective measurement period. For results obtained through the RPI-Monitor tool, the data from each service’s test period is averaged. For data obtained through Htop and Docker stats, the maximum values for each metric are taken, as visualized in the open SSH terminals during the test period.
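The aggregation described above (maximum per metric for ‘docker stats’ samples, average for RPI-Monitor samples) can be sketched as follows. The example assumes that samples were collected with `docker stats --no-stream --format "{{.Name}},{{.CPUPerc}},{{.MemPerc}}"`, which prints one comma-separated line per container. The sample lines and load values in the demonstration are hypothetical and do not reproduce the measurements of this paper.

```python
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# Format string assumed for: docker stats --no-stream --format "<STATS_FORMAT>"
STATS_FORMAT = "{{.Name}},{{.CPUPerc}},{{.MemPerc}}"


def parse_stats_line(line: str) -> Tuple[str, float, float]:
    """Parse 'name,cpu%,mem%' into (name, cpu, mem) with numeric percentages."""
    name, cpu, mem = line.strip().split(",")
    return name, float(cpu.rstrip("%")), float(mem.rstrip("%"))


def peak_per_service(lines: Iterable[str]) -> Dict[str, Tuple[float, float]]:
    """Keep the maximum CPU and memory percentage observed for each container."""
    peaks: Dict[str, Tuple[float, float]] = {}
    for line in lines:
        name, cpu, mem = parse_stats_line(line)
        prev_cpu, prev_mem = peaks.get(name, (0.0, 0.0))
        peaks[name] = (max(prev_cpu, cpu), max(prev_mem, mem))
    return peaks


def average_over_period(samples: List[float]) -> float:
    """Average the samples recorded during one service's test period."""
    return mean(samples)


if __name__ == "__main__":
    sample_lines = ["dns,3.10%,1.10%", "dns,9.75%,1.10%", "ftp,2.40%,0.40%"]  # hypothetical
    print(peak_per_service(sample_lines))
    print(average_over_period([0.42, 0.47, 0.44]))  # hypothetical load samples
```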

G. Service software

The network services implemented in Docker containers are based on Linux servers following a client-server architecture. In this context, each service incorporates a daemon that runs and manages the services according to predefined configuration parameters. Below are the details of each software, its configuration, and the type of implementation in containers:

Domain name resolution service: It uses a server based on the Internet Systems Consortium’s Berkeley Internet Name Domain (Bind9), one of the most popular DNS servers in Linux distributions. This server acts as a master server, locally hosting primary DNS zone records and responding to pre-configured name resolution requests for each containerized service and the monitoring service.

Addressing service: It is based on the Internet Systems Consortium DHCP (ISC DHCP) server, widely used for IP address assignment and network configuration. The implementation follows a basic configuration of IPv4 address assignment to DHCP clients, providing the addresses of the containerized DNS server and the gateway address configured for each of the Raspberry Pi’s Ethernet ports.

File transfer service: It uses the Very Secure FTP Daemon (vsftpd) server, which allows secure and efficient file or directory transfers. The implementation uses active mode and supports custom file transfers for configured local users. These users are within a chroot environment containing a 64 KB file and 70 KB of storage space for file transfers with the FTP client.

Web service: It uses a server based on Nginx, known for its high performance, scalability, and low resource consumption(25). The web service implements a default web server along with two virtual web servers based on Nginx virtual blocks, allowing the hosting of multiple web pages. These pages are accessed along with the aforementioned containerized DNS service.

VoIP service: It is based on the Sangoma Asterisk server, an open-source framework under the GPL license that enables the development of real-time multiprotocol communication applications, such as high-quality voice and video applications. This service implements a PBX server to configure and manage VoIP extensions. Four IP phone station extensions are configured, of which two are used for functional tests between two clients connected to the Raspberry Pi’s Ethernet ports to evaluate the containerized VoIP service without the limitations of wireless connections.

Routing service: It is based on the open-source FRRouting software, providing traditional router functionality. The current implementation uses OSPF, one of the most widely used routing protocols today. This protocol provides routing between the Raspberry Pi boards, enabling connection to the services.

IV. RESULTS

A. CPU and Memory Performance Analysis

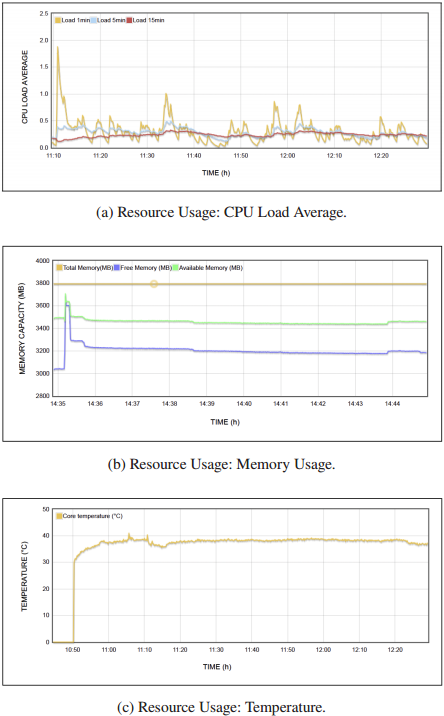

This section presents the results obtained from the implementation using Docker Compose. As initial indicators, data were collected through the ‘Rpi-Monitor’ tool, which displays CPU utilization statistics in Figure 7a, memory usage in Figure 7b, and CPU temperature in Figure 7c over the entire testing interval for each of the containerized services. For the deployment using Docker Compose, the CPU load average indicator starts with an initial value of 1.88 (47.08 %) at the host’s startup. Subsequently, this load decreases to 0.37 (9.25 %). From this point onwards, the network service tests are initiated and divided into sections as detailed below.

Section I (11:30 am - 11:40 am) - Routing: During the connection between the RPI devices, a maximum load value of approximately 1.0 (25 %) can be observed. This value corresponds to the initial OSPF routing process between the devices.

Section II (11:45 am - 11:50 am) - End-to-end Connection: During the end-to-end connection tests between the clients, the maximum load value reached is 0.518 (12.95 %).

Section III (11:52 am - 11:53 am) - Connection to servers: The maximum load reached during the tests for connections to the containerized servers is 0.5 (12.5 %).

Section IV (11:53 am - 11:54 am) - DNS: In this section, the load reaches values of 0.44 (11 %).

Section V (11:59 am - 12:00 pm) - HTTP: The load reached during the access to the HTTP pages obtains a maximum value of 0.863 (21.575 %). Additionally, according to the DNS traffic I/O graphs analysis, the traffic increases considerably during the HTTP testing period and remains elevated after this test.

Section VI (12:00 pm - 12:04 pm) - FTP: During the tests of the 2 FTP connections, maximum load values of 0.801 (20.025 %) are recorded for client 1.

Section VII (12:04 pm - 12:22 pm) - VoIP: During the VoIP tests, the CPU load peaks at 0.413 (10.33 %) during the call from extension 2001 to extension 2002. The load remains low for the rest of the calls at an average of 0.180 (4.5 %).
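The percentages quoted next to the load averages in the list above are consistent with dividing the load by the number of CPU cores. The short sketch below reproduces that conversion. The core count of 4 is an assumption inferred from the reported pairs (for example, 1.0 corresponds to 25 %) and is not stated explicitly in the text.

```python
ASSUMED_CORES = 4  # assumption inferred from the reported value pairs


def load_to_percent(load_average: float, cores: int = ASSUMED_CORES) -> float:
    """Express a CPU load average as a percentage of total core capacity."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return load_average / cores * 100.0


if __name__ == "__main__":
    for load in (1.0, 0.518, 0.863, 0.801):
        print(f"load {load:.3f} -> {load_to_percent(load):.3f} %")
```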

Figure 7b shows the system memory usage, which remains constant as the tests run. The available free memory starts at 3 280 MB during the host’s startup and remains close to 3 176.5 MB. This reflects a constant memory usage of approximately 617.8 MB out of a total of 3 794.32 MB of available memory.

Figure 7c shows the CPU temperature, which starts at 35.95°C at startup and rises to an average of 38.6°C, with a maximum of 39.2°C during the HTTP tests. After the VoIP tests, it drops to 36.7°C.

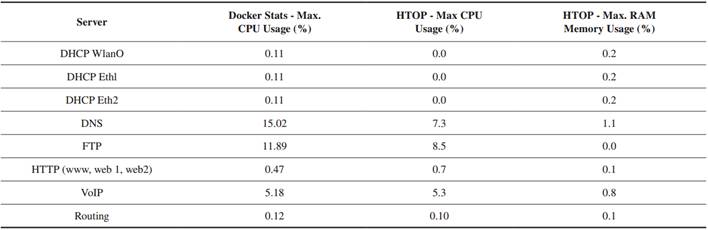

The second performance indicator obtained through the ‘docker stats’ and ‘htop’ tools is detailed in Table II. The DNS service stands out with a maximum CPU usage of 15.02 %, followed by the FTP service with usage of up to 11.89 %, and the VoIP service with 5.18 %. The other containerized services show relatively low values, all below 1 %.

Regarding memory usage, the DNS service also has the highest value, with 1.1 %, followed by the VoIP service, with 0.8 %. It is important to note that these memory values are constant for all services during the Docker Compose implementation.

B. Quality of Service Analysis

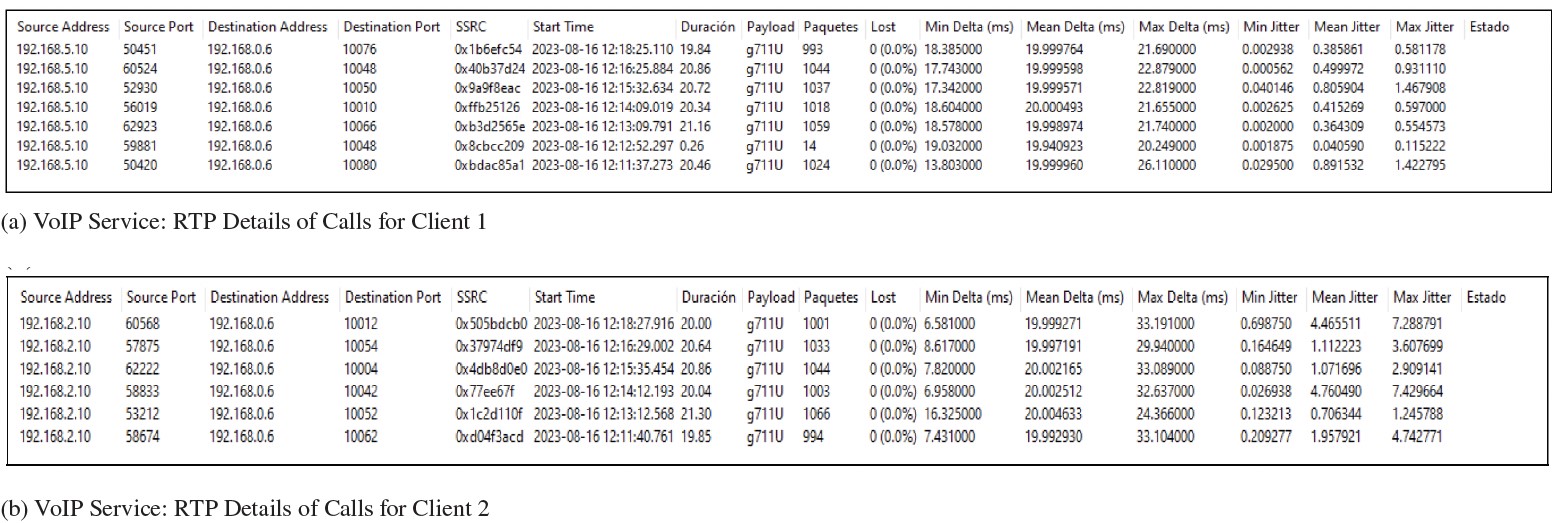

The analysis of RTP traffic provides information about the QoS of the VoIP service, as detailed in Figure 8. The results for VoIP calls are similar to those implemented previously, including:

Payload codec: g711U vocoder.

Packet loss: 0 % packet loss for all calls.

Delays: Minimal delays are recorded, with slightly higher values for client 1 than for client 2. The averages of these values are 18.39 ms for client 1 and 7.63 ms for client 2. The average delay is 19.99 ms for both clients, with maximum delays of 21.74 ms for client 1 and 32.87 ms for client 2.

Jitter: Jitter values vary significantly for client 2, where they can reach up to 7 ms, while client 1 experiences jitter of up to 1.46 ms.
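For reference, RTP analysis tools commonly report jitter using the interarrival jitter estimator defined in RFC 3550, where each new packet updates a running value by one sixteenth of the difference between the latest transit-time variation and the current estimate. The sketch below implements that estimator on timestamps expressed in milliseconds. The 20 ms packet spacing in the sample data is an assumption for illustration only and is not taken from the captures analyzed here.

```python
from typing import Sequence


def interarrival_jitter_ms(send_ms: Sequence[float], recv_ms: Sequence[float]) -> float:
    """Running RFC 3550 jitter estimate after processing all packets.

    For consecutive packets, D = (R_i - R_{i-1}) - (S_i - S_{i-1}) and
    J = J + (|D| - J) / 16.
    """
    if len(send_ms) != len(recv_ms):
        raise ValueError("send and receive sequences must have equal length")
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # Difference between receive spacing and send spacing for this pair.
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16.0
    return jitter


if __name__ == "__main__":
    send = [0.0, 20.0, 40.0, 60.0]   # hypothetical: one packet every 20 ms
    recv = [5.0, 25.0, 49.0, 66.0]   # hypothetical arrival times
    print(f"jitter estimate: {interarrival_jitter_ms(send, recv):.4f} ms")
```

Because of the 1/16 gain, isolated late packets raise the estimate only gradually, which is why peak jitter can stay well below individual delay spikes.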

V. CONCLUSIONS

Implementing network services through containers allows for effective deployment on systems with limited CPU, memory, and storage resources, such as Raspberry Pi boards. As demonstrated in services like HTTP and VoIP, these instances delivered the final product, a web page or a voice call, without significant degradation in service quality and with optimal resource usage. For example, three web pages were loaded without errors in the implementation using Docker CLI for HTTP, with CPU usage as low as 0.47 % and memory consumption of 0.1 %. Regarding the VoIP service, calls were made without distortions, delays, or audio loss, maintaining CPU usage at 5.18 % and memory consumption at 0.8 %.

The implementation of network services through containers has shown minimal resource usage in most cases. Services like DHCP, HTTP, and Routing show near-zero CPU and memory consumption. In contrast, services like DNS, FTP, and VoIP show higher consumption. This can be partly explained by factors such as the volume of request traffic, which, in the case of DNS, was considerably higher than for other services. It can also be due to the transfer of information, as in the case of FTP. Additionally, their position in the network architecture should be considered, because their location may imply implicit involvement in other services, which, in turn, can increase resource consumption. This is evident in the case of the DNS service, which is used implicitly to support web services when resolving domain names to access a web page.

The architecture of containerized services also significantly influences their performance. This is because the underlying software has a variety of architectures to offer the service. Some services, like DHCP, DNS, and FTP, adopt a simple client-server architecture based on daemons and configuration files. Others, like HTTP, VoIP, and Routing, have more complex architectures with dedicated modules to provide the service. For example, the HTTP service, based on NGINX, which allows for efficient deployment without containerization, highlights the importance of decentralization, even when dealing with a single application. Its structure consists of modules with internal management that favors efficiency. In contrast, services like VoIP and Routing have architectures that can be more challenging regarding management and performance.

Containerizing services provides a high level of scalability, allowing the deployment of multiple containers to provide versatile, flexible, and efficient services. An example is the DHCP service, where multiple containers based on the same image are deployed. This provides a highly flexible addressing service with multiple configurations available for deployment. This achievement is partly due to using environment variables, which allow the behavior of a containerized service to be changed without modifying its image. This creates a scalable, dynamic, and adaptable service that can be efficiently offered to users, as determined by CPU usage and memory consumption.
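One way environment variables can drive several DHCP containers from a single image is for an entrypoint script to render the server configuration from them at startup. The sketch below illustrates the idea using standard ISC DHCP statements (`subnet`, `range`, `option routers`, `option domain-name-servers`). The variable names and default addresses are hypothetical and do not correspond to the authors' image.

```python
import os
from typing import Mapping, Optional

# Hypothetical variable names and defaults, used only for illustration.
DEFAULTS = {
    "DHCP_SUBNET": "192.168.10.0",
    "DHCP_NETMASK": "255.255.255.0",
    "DHCP_RANGE_START": "192.168.10.100",
    "DHCP_RANGE_END": "192.168.10.200",
    "DHCP_ROUTER": "192.168.10.1",
    "DHCP_DNS": "192.168.10.2",
}


def render_dhcpd_conf(env: Optional[Mapping[str, str]] = None) -> str:
    """Render a dhcpd.conf subnet block from environment variables."""
    env = os.environ if env is None else env
    # Fall back to the default for any variable that is not set.
    v = {key: env.get(key, default) for key, default in DEFAULTS.items()}
    return (
        f"subnet {v['DHCP_SUBNET']} netmask {v['DHCP_NETMASK']} {{\n"
        f"  range {v['DHCP_RANGE_START']} {v['DHCP_RANGE_END']};\n"
        f"  option routers {v['DHCP_ROUTER']};\n"
        f"  option domain-name-servers {v['DHCP_DNS']};\n"
        "}\n"
    )


if __name__ == "__main__":
    print(render_dhcpd_conf())
```

Each container would then be started with different values, for example through `docker run -e DHCP_ROUTER=...` or the `environment` key of a Compose service, while the image itself stays unchanged.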

Docker is a highly versatile tool that brings significant benefits to the deployment of network services. Its diverse ecosystem encompasses essential plugins, such as storage through volumes and bind mounts. In this context, it has been observed that these mechanisms allow interaction with a container’s file system, making it easier to modify and configure a service without the need to directly access the containerized environment or altogether remove the isolated environment to make changes. Additionally, it has been found that rebuilding containers using volumes is a valuable mechanism for migrating services. An example of this is the use of containers to store configuration logs, as in the case of routing services with ‘daemon’ and ‘zebra.config’ files or in the case of the DHCP service with lease records in ‘dhcpd.lease’. These records allow replicating the same configurations in other containers, ensuring the continuity of the service. However, it is important to note that logs stored via volumes can be prone to corruption. This is because, over time, these files can begin to record incomprehensible strings of characters, affecting the service’s operation.

Another highly versatile tool for service deployment is network controllers through network objects. These networks enable the configuration and modification of service management and communication, providing essential features such as network isolation, traffic control, access, and scalability. This flexibility is evident when implementing services with ‘bridge’-type networks. In this configuration, services were mapped to specific ports to allow external access through the container. As observed, using ports different from the default value for Network Address Translation (NAT) between the container and the external network provided greater isolation and security for the service. It also allowed the incorporation of multiple containers offering the same service but mapped to different ports to receive the service. This is especially useful in services like HTTP, where high scalability is achieved using a different port for each container. However, in services like passive FTP and VoIP, port mapping involves maintaining constant NAT over a range of ports (FTP: for transfer, random port >1 048, and VoIP: audio transmission via RTP, random port between 10 000 and 20 000). In these cases, considering a NAT that covers the entire port range to obtain service connection on a non-specific port was impractical as it affects performance, as mentioned in (19), (20). For this reason, using ‘host’-type networks, which remove the isolation level between containers and share the network with the host, allowed for services with low resource usage but limited scalability.
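The cost of mapping a whole port range on a bridge network can be made concrete with a small sketch. Docker's `--publish` option accepts ranges of the form `first-last:first-last/protocol`, and the function below builds such an argument and counts how many ports it covers. The RTP range used in the demonstration is the one quoted above, and treating it as inclusive is an assumption of this sketch.

```python
from typing import List


def port_count(first: int, last: int) -> int:
    """Number of ports in the inclusive range [first, last]."""
    if not (0 < first <= last <= 65535):
        raise ValueError("invalid port range")
    return last - first + 1


def publish_range(first: int, last: int, protocol: str = "udp") -> List[str]:
    """Build a `docker run --publish` argument mapping a range one-to-one."""
    if protocol not in ("tcp", "udp"):
        raise ValueError("protocol must be 'tcp' or 'udp'")
    port_count(first, last)  # validates the range
    return ["--publish", f"{first}-{last}:{first}-{last}/{protocol}"]


if __name__ == "__main__":
    print(publish_range(10000, 20000))
    print(port_count(10000, 20000), "ports would need NAT entries")
```

Under a ‘host’ network none of these mappings are needed, since the service binds to the host's ports directly.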

Regarding the containerized VoIP service, high performance and QoS are observed when using Ethernet cable transmission. This is confirmed through RTP parameters captured with Wireshark, where the average delay of calls is 20 ms, compared to the maximum allowable delay of 150 ms, and maximum jitter values are 7 ms, staying below the maximum allowable of 50 ms. In summary, containerizing VoIP services for end-to-end calls through wired connections does not degrade QoS.